Another news report of a Tesla "losing control" has come from China, this time with 2 deaths and 6 injuries. This year there have been three incidents in China, and in Shanghai a car rushed into a gas station and injured two people.

Tesla has been reported many times for "suddenly speeding up and losing control". Each time, Tesla tries to show that this is impossible by design, while the driver holds firmly to his own account. It has become something of an unsolved mystery. Car owners usually say that the car really was out of control. The typical report is that a sudden speed-up frightened a driver who, flustered, braked in vain or had no time to brake, and an accident followed. Tesla usually replies that the acceleration is a feature of autopilot, not a bug, and that the accident was caused by improper operation or carelessness on the driver's part. In addition, Tesla says, the brakes never fail (unlike Toyota, which at one point admitted a defect and had to recall millions of cars), so an attentive driver can always take back control at any time. Tesla fans, who are also car owners, often side with Tesla and condemn the drivers involved, which is not uncommon in discussions on Tesla's Facebook fan club pages.

As a Tesla owner and a tech guru who has "played with" a Tesla (perhaps the biggest gadget toy of my life) for almost a year, I have to say that both sides have reasonable narratives. Indeed, almost every case turns out in the end not to be a real bug. Technically speaking, an "out-of-control speed-up" is not supposed to be possible in Tesla's engineering design. Acceleration is by nature part of any automatic driving, autopilot included. After all, can there be an automatic driving machine that only decelerates and parks? So any speed-up can be argued to be an innate feature rather than a bug. Autopilot is software controlling hardware, so bugs are inevitable; yet, at least so far, no one has been able to prove that Tesla's automatic driving has an "out-of-control bug". In fact, "going out of control" is probably an ill-posed proposition in software engineering to begin with.

On the other hand, Tesla drivers clearly "feel" out of control, and there is no need to question that feeling. In fact, every Tesla owner has had such an experience to some extent. Tesla, as the manufacturer, bears responsibility for failing to greatly reduce (if not eliminate) the scenarios that make drivers "feel" out of control. It is not that Tesla has made no effort, but it always (has to) rush new versions out over-the-air (OTA). Because software training and engineering are incremental by nature, Tesla has little time to take care of the "feelings" of all its customers, some of whom know very little about software and, in a panic, simply think the machine has gone crazy. In fact, there is no standard that defines what kind of speed counts as "out of control" (the speed limit plus some margin may be regarded as the absolute upper limit, which Tesla never fails to respect). As long as the speed-up stays within the preset upper limit, it can always be argued to be a feature rather than a bug. Not everyone has the same tolerance for surprise changes of speed, so a driver's report of losing control is as real as the many reports from people who claim to have seen a UFO. The feeling is real, but the world as felt is not necessarily the objective world.

I have actually made a serious study of Tesla's reported sudden-acceleration issue. Most cases look like a mixture of misunderstanding and illusion, and a loss of control of the kind Toyota admitted years ago has not been verified on a Tesla. The so-called sudden acceleration might well happen in the following scenarios.

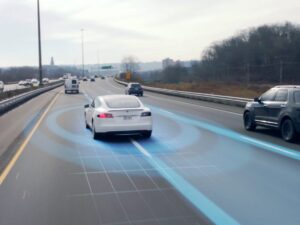

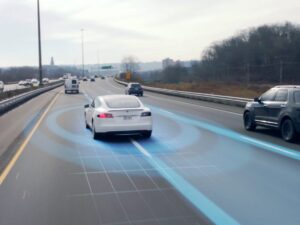

In automatic driving, if the road ahead is clear, the car accelerates up to the set speed limit. This is, of course, a feature, not a bug. But in early versions, when the car in front suddenly moved into the neighboring lane, the Tesla would accelerate suddenly and quickly, which really made people feel scared and out of control. Later software updates began to moderate the pace of acceleration, taking better care of people's feelings. Those updates effectively reduced the number of "out-of-control" complaints.

More specifically, Tesla currently has two states of assisted automatic driving. One is called "traffic-aware cruise control", which controls only the speed and not the steering wheel; the other is the so-called "autopilot", which also controls the steering wheel. Note that there are clear chimes for entering and exiting autopilot, but so far there is no obvious sound prompt for entering or exiting the cruise state (there are signs on the screen, but no alert sound). Drivers sometimes forget that they are in cruise control, especially when they have used the accelerator pedal for a while and then lift their foot, which triggers the machine takeover. That scenario can easily create the illusion that, although they are driving (holding the steering wheel and having used the accelerator pedal), the car is out of control and accelerating by itself! This has happened to me a few times, and over time I have learned to get used to this human-machine interaction without panicking.

In any case, the driver can take back control at any time. As long as you don't panic, the brakes can stop the acceleration immediately. In addition, automatic emergency braking kicks in whenever the Tesla detects a collision risk. However, emergency braking only works in emergencies, and hidden bugs have been found in its failure to cover all emergency cases. There are misjudgments: for example, taking a big white truck standing still ahead for an ordinary white cloud in the blue sky, and bumping right into it. Another known example is an obstacle such as a police car parked on the highway roadside that intrudes into the lane rather than blocking the entire lane. Tesla cannot always judge whether to steer clear of such a police car, and numerous accidents of this kind have been reported, with netizens joking that Tesla loves to challenge the police by hitting them. These are known bugs in the current Tesla autopilot, which cannot prevent 100% of collisions.

On the other hand, giving few prompts seems to be a deliberate design choice, a feature in the name of "seamless human-machine coupling" (interaction and cooperation). Tesla drivers can take over speed control at any time by stepping on the accelerator, whether in cruise control or in autopilot. What drivers need to be warned about in advance is that as soon as the accelerator is released, the machine takes over and speeds up, within the preset maximum speed, according to road conditions. This sudden and seamless takeover often startles people and makes them feel out of control when the car accelerates more than expected.
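The hand-over between driver and machine described here can be pictured as a small decision rule. What follows is only an illustrative sketch, not Tesla's actual logic: the function name `speed_command`, its parameters, and the use of mph are all assumptions made for the example.

```python
from typing import Optional, Tuple


def speed_command(
    cruise_engaged: bool,
    pedal_pressed: bool,
    driver_request_mph: float,
    set_speed_mph: float,
    lead_car_speed_mph: Optional[float] = None,
) -> Tuple[str, float]:
    """Return (who controls the speed, target speed in mph).

    Hypothetical rule: the driver is in control whenever cruise is off
    or the accelerator pedal is pressed; otherwise the machine is.
    """
    if not cruise_engaged or pedal_pressed:
        return ("driver", driver_request_mph)

    # Machine in control: follow a slower car ahead if there is one,
    # otherwise head for the preset maximum speed.
    if lead_car_speed_mph is not None:
        return ("machine", min(set_speed_mph, lead_car_speed_mph))
    return ("machine", set_speed_mph)


# Driver holds the pedal at 50 mph, then lifts the foot on a clear road:
print(speed_command(True, True, 50.0, 70.0))   # driver keeps 50 mph
print(speed_command(True, False, 50.0, 70.0))  # machine now targets 70 mph
```

In this toy model nothing changes on the screen or in sound between the two calls; only the pedal state differs, yet the target jumps from 50 to 70 mph. That silent jump is the moment that tends to feel like "sudden acceleration".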

In fact, there is a solution to this problem, and I don't know why Tesla has not implemented it properly. Perhaps Tesla has overemphasized seamlessness in human-machine coupling and therefore tolerated the side effects. The solution is fairly straightforward. First, entering and exiting cruise control should also be signaled by some kind of chime by default; at the very least, this prompt should be configurable so it can be turned on or off. Drivers should always know, without having to figure it out, whether the car is under their own control or the machine's at any given time. Second, after the machine takes over, even if the road is completely clear and the adjacent lanes are free of traffic, the acceleration should not be abrupt. It should be gradual, considerate of human feelings and not only of the objective requirements of safe driving. With these two changes, I believe the "out-of-control" reports discussed above would be greatly reduced. Is anyone here a Tesla insider? Please help deliver these suggestions to Tesla, to help avoid more complaints and make Tesla safer and more user-friendly.
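To make the two suggestions concrete, here is a minimal sketch of what they could look like in code. It is purely hypothetical: the class `CruiseSketch`, its fields, and the 2 mph-per-second ramp are invented for illustration and say nothing about how Tesla's software is actually built.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CruiseSketch:
    chime_enabled: bool = True          # suggestion 1: configurable prompt
    max_accel_mph_per_s: float = 2.0    # suggestion 2: assumed gentle ramp
    engaged: bool = False
    events: List[str] = field(default_factory=list)

    def set_engaged(self, engaged: bool) -> None:
        """Record a chime whenever cruise is actually entered or exited."""
        if engaged == self.engaged:
            return  # no state change, so no prompt
        self.engaged = engaged
        if self.chime_enabled:
            self.events.append("chime: cruise on" if engaged else "chime: cruise off")

    def next_speed(self, current_mph: float, target_mph: float, dt_s: float) -> float:
        """Move toward the target, limiting only how fast the car speeds up."""
        if target_mph > current_mph:
            max_step = self.max_accel_mph_per_s * dt_s
            return min(target_mph, current_mph + max_step)
        # Slowing down is passed through unchanged in this sketch:
        # braking should never be delayed for comfort.
        return target_mph


cruise = CruiseSketch()
cruise.set_engaged(True)
print(cruise.events)
print(cruise.next_speed(50.0, 70.0, 1.0))
```

With the ramp in place, a jump in target from 50 to 70 mph is spread over about ten seconds instead of arriving all at once, and the chime tells the driver that the machine, not the foot, is now setting the speed.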

In fact, phantom braking is more annoying in a Tesla than sudden acceleration. It is called phantom because it is usually hard to determine what triggers it. In the past it happened frequently: anything from shadows on the road to direct sunlight might cause phantom braking, startling people and increasing the risk of a rear-end collision. As the autopilot software has been retrained on more data and continuously updated, phantom braking has decreased significantly, although it still happens occasionally, so drivers do need some time to adapt and stay prepared for it.

After almost a year as a Tesla owner, how often have I "felt out of control" while driving? Phantom braking aside, I have experienced an unexpected abrupt speed-up or lane swerve about four or five times in 9+ months of driving. Each time it happens I feel a little shaken, but with a prepared mindset I have been able to take back control safely every time. I can imagine, though, what may happen to a newbie with an unprepared mind.

Software engineering is incremental by nature; it is normal for software to be imperfect and immature, permanently a work in progress. When an immature product is put on the market, it inevitably leads to disputes. Amazingly, Elon Musk, who is not afraid of taking risks, can withstand such disputes even when they involve life and death, and Tesla remains popular in the stock market. I know part of the reason is the wonder of innovation realized in such futuristic products, which are way ahead of the competition.

Tesla's QA (Quality Assurance) is far from satisfactory, and it definitely does not reach the stringent standard often seen at the software giants of the IT industry. Part of the reason, I guess, is the big boss. Musk "whips" the engineering team for speed every day. He is known as a tough boss who puts tons of pressure on autopilot development and has boasted too many times about features that are far from complete. Under the pressure of such a boss, and with the stimulus of stock options, how can programmers have the luxury of pursuing top-level QA? As a result, Tesla's over-the-air software updates, pushed as often as once every couple of weeks, often take two steps forward and one step back. Regression "bugs", as well as even the smallest enhancements, are reported by passionate users all over social media, quite a unique phenomenon in the software world. But given its corporate culture, Tesla can hardly afford to slow down feature development, nor can it afford a long QA process to ensure safety.

I have always loved new gadgets, and Tesla is my most recent one. To tell the truth, the fun this big toy brings is completely beyond what I imagined. Automatic driving, usually out of reach for consumers, is now something I play with every day, thanks to Tesla's autopilot. I take great pleasure in driving such a supercomputer around the valley, often purely to test all the new features and experience the feel of real-life AI embodied in a Tesla. In fact, the fun grows when I find "bugs" along the way, because then I am always looking forward to the next upgrade, hoping the issues have been resolved; each upgrade often brings a full load of surprises, good or bad. I often go online to check which version is the most recent release, and I install a new upgrade as soon as I get the notice (I seem to be in the top 5-10% of users to receive upgrades, and I often envy the beta testers who are always the first to try a big new version). Among the numerous feature updates since I bought my Tesla are single-pedal driving supported by regenerative braking (such a joy once you experience its convenience and benefits), performance improvements in automatic lane change, and responses to traffic lights. A recent upgrade added automatic window closing on locking, which is also very good and has solved, once and for all, my worry about forgetting to close the windows. This is another convenience feature for Tesla, following its long-standing automatic door unlocking and locking.

The process is more important than the result. If the car were a perfect robot, a finished product from the future, I would feel like a fool sitting inside it: it would have nothing to do with you, you could not get engaged, and you would be just another passenger to be served. You are you and the vehicle is a vehicle, no different from any other tool we use and forget in life. The current, ongoing experience is different. We are coupled with the vehicle seamlessly, and the Tesla not only seems like a living friend but often feels like an extension of ourselves. This kind of "man-machine coupling" proves to be the most fun for a tech guru. No wonder engineers in the valley became the first large wave of Tesla owners. There is an unspeakable enjoyment in driving a supercomputer around every day while the machine pops up some "bugs" from time to time (not necessarily bugs in the strict engineering sense). Although you cannot drill down into the software to debug it, you can evaluate, guess, and imagine how the problem is caused.

There are incredible pleasant surprises, too. For example, autopilot at night and in storms used to be thought of as the worst nightmare for Tesla, yet it actually ended up performing exceptionally well. I ran into two heavy storms on the road that made it very difficult for me to control the car myself, so I tried turning on autopilot. Automatic driving turned out to be more stable, slowing down automatically and staying steadfastly inside the lane. As for night driving on the freeway, I found it to be the safest. I bet you could really sleep or nap for an extended period inside the car with no problem at all when autopilot is on (of course we cannot do that now; it is against traffic law). One big reason for the safety of night driving is that at night, crazy and wild driving and roaring motorcycles are almost extinct, and everyone follows the rules and focuses on getting home. For a human driver, night driving is often monotonous and long, and we are prone to fatigue. But autopilot does not know fatigue, so for it there is really no challenge at all. Machines don't necessarily find difficult what people find difficult.

thanks to Sougou Translate from https://liweinlp.com/?p=7094
