Wei:

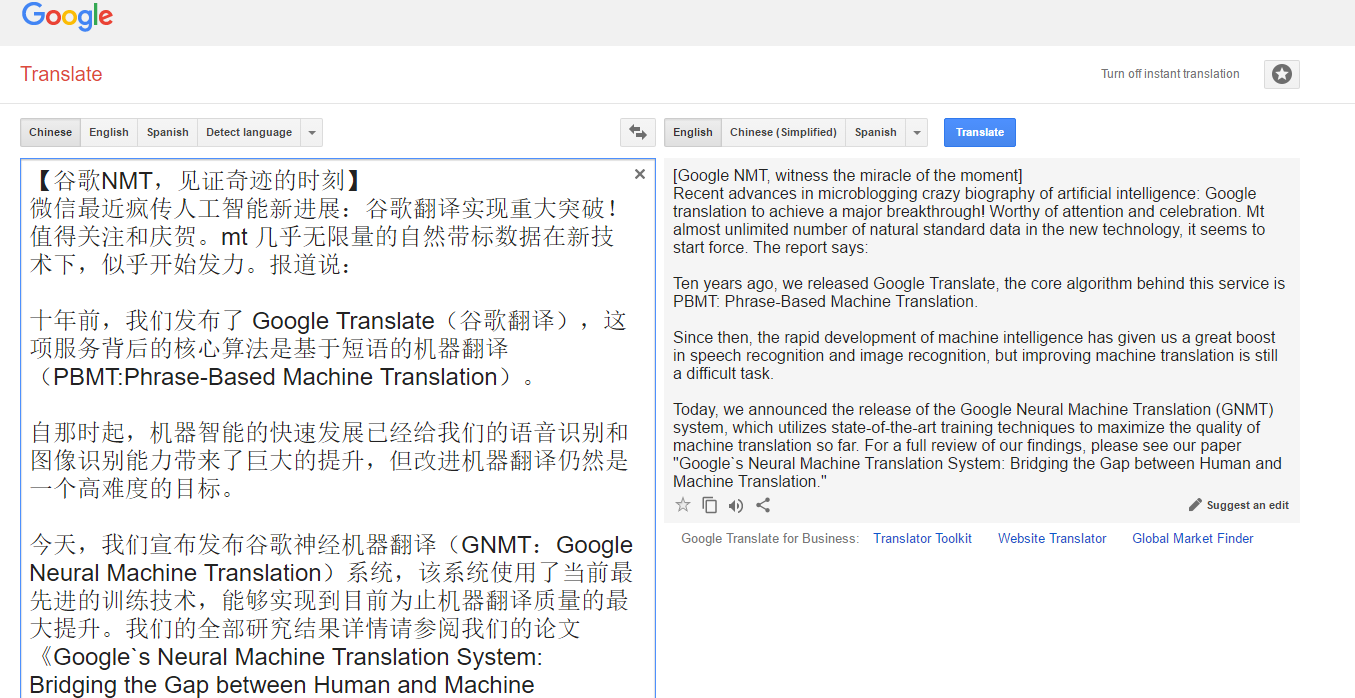

Recently, the microblogging (WeChat) community has been full of heated discussion and testing of Google Translate's newly announced breakthrough in its NMT (neural machine translation) offering, which is claimed to have achieved significant progress in translation quality and readability. It sounds like a major breakthrough worthy of attention and celebration.

The report says:

Ten years ago, we released Google Translate. The core algorithm behind this service was PBMT: Phrase-Based Machine Translation. Since then, the rapid development of machine intelligence has given us a great boost in speech recognition and image recognition, but improving machine translation is still a difficult task.

Today, we announced the release of the Google Neural Machine Translation (GNMT) system, which utilizes state-of-the-art training techniques to achieve the largest improvement in machine translation quality to date. For a full review of our findings, please see our paper "Google's Neural Machine Translation System: Bridging the Gap between Human and Machine Translation."

A few years ago, we began using RNNs (Recurrent Neural Networks) to directly learn the mapping from an input sequence (such as a sentence in one language) to an output sequence (the same sentence in another language). Phrase-based machine translation (PBMT) breaks the input sentence into words and phrases and then translates them largely independently, while NMT treats the entire input sentence as the basic unit of translation.

The advantage of this approach is that it requires less engineering design than the previous phrase-based translation system. When it was first proposed, the accuracy of NMT on medium-sized public benchmark data sets was comparable to that of a phrase-based translation system. Since then, researchers have proposed a number of techniques to improve NMT, including mimicking an external alignment model to handle rare words, using attention to align input and output words, and decomposing words into smaller units to cope with rare words. Despite these advances, the speed and accuracy of NMT had not been able to meet the requirements of a production system such as Google Translate. Our new paper describes how to overcome many of the challenges of making NMT work on very large data sets and how to build a system that is both fast and accurate enough to deliver a better translation experience for Google users and services.
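(An aside from the editor's desk, not part of the Google report: the "smaller units" idea mentioned above can be illustrated with a toy sketch. The greedy longest-match strategy and the tiny vocabulary below are assumptions made purely for illustration; they are not Google's actual segmentation model, which is learned from data.)

```python
def segment(word, vocab):
    """Greedily split `word` into the longest pieces found in `vocab`.

    Characters that match no known piece are emitted one at a time,
    so every word, however rare, still gets some segmentation.
    """
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        end = len(word)
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            # No known piece starts here: fall back to a single character.
            end = start + 1
        pieces.append(word[start:end])
        start = end
    return pieces


# Toy vocabulary, invented for this example only.
TOY_VOCAB = {"trans", "lat", "ion", "un", "able", "s"}

print(segment("translations", TOY_VOCAB))
print(segment("untranslatable", TOY_VOCAB))
```

The point of the sketch is only that a rare word such as "untranslatable" no longer needs its own vocabulary entry: it can be represented by pieces the system has seen many times in other words.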

............

Using side-by-side comparisons by human raters as the standard, the GNMT system produces significantly better translations than the previous phrase-based production system. With the help of bilingual human raters, we found on sample sentences from Wikipedia and news websites that GNMT reduced translation errors by 55% to 85% or more on several major language pairs.

In addition to publishing this research paper today, we have also announced that GNMT is being put into production for a notoriously difficult language pair: Chinese to English.

Now, Chinese-to-English translation in the mobile and web versions of Google Translate is handled 100% by GNMT, about 18 million translations per day. GNMT's production deployment uses our open machine learning toolkit TensorFlow and our Tensor Processing Units (TPUs), which provide sufficient computational power to deploy these powerful GNMT models while meeting Google Translate's strict latency requirements.

Chinese to English is one of the more than 10,000 language pairs supported by Google Translate. In the coming months, we will continue to extend GNMT to many more language pairs.

Google Translate achieves a major breakthrough with GNMT!

As an old machine translation researcher, I cannot resist this temptation. I cannot wait to try this latest version of Google Translate for Chinese-English.

Previously I tried Google's Chinese-to-English online translation multiple times; the output was not very readable and certainly not as good as that of its competitor Baidu. With this newest breakthrough using deep learning with neural networks, it is believed to get close to human translation quality. I have a few hundred Chinese blog posts on NLP waiting to be translated as a trial. I was looking forward to this first attempt at using Google Translate on my science popularization blog post titled Introduction to NLP Architecture. My adventure is about to start. Now is the time to witness the miracle, if a miracle does exist.

Dong:

I hope you will not be disappointed. I have jokingly said before that rule-based machine translation is a fool and statistical machine translation is a madman, and now I continue the ridicule: neural machine translation is a "liar" (I am not referring to the developers behind NMT). Language is not a cat face or the like; surface fluency alone does not work, and the content must be faithful to the original!

Wei:

Let us experience the magic. Please listen to this translated piece of my blog:

This is my Introduction to NLP Architecture, fully automatically translated by Google Translate yesterday (10/2/2016) and fully automatically read out without any human intervention. I have to say, this is way beyond my initial expectation and belief.

Listen to it for yourself: the automatic speech generation of this science blog of mine is amazingly clear and understandable. If you are an NLP student, you can take it as a lecture note from a seasoned NLP practitioner (definitely clearer than if I were giving this lecture myself, with my strong accent). The original blog was in Chinese, and I used the newest Google Translate, which is claimed to be based on deep learning using sentence-based translation as well as character-based techniques.

Prof. Dong, you know my background and my originally skeptical mindset. However, in the face of such progress, far beyond the limits of what we imagined for automatic translation in terms of both quality and robustness when I started my NLP career in MT training 30 years ago, I have to say that it is a dream come true in every sense.

Dong:

In their terminology, it is "less adequate, but more fluent." Machine translation has gone through three paradigm shifts. When people find that it can only be a good information processing tool and cannot really replace human translation, they will choose the less costly option.

Wei:

In any case, this small test is revealing to me. I am still feeling overwhelmed at seeing such a miracle live. Of course, what I have just tested is in a formal style, on a computer and NLP topic, so it certainly hit the system's sweet spot, with adequate training corpus coverage. But compared with the pre-NN time, when I used both Google SMT and Baidu SMT to help with my translation, this breakthrough is amazing. As a senior old-school practitioner of rule-based systems, I would like to pay deep tribute to our "neural-network" colleagues. They are a group of crazy guys of extreme genius. I would like to quote Jobs' famous words here:

“Here's to the crazy ones. The misfits. The rebels. The troublemakers. The round pegs in the square holes. The ones who see things differently. They're not fond of rules. And they have no respect for the status quo. You can quote them, disagree with them, glorify or vilify them. About the only thing you can't do is ignore them. Because they change things. They push the human race forward. And while some may see them as the crazy ones, we see genius. Because the people who are crazy enough to think they can change the world, are the ones who do.”

@Mao, this counts as my most recent feedback to the Google scientists on their work. Last time, a couple of months ago, when they released their parser and proudly claimed it to be "the most accurate parser in the world", I wrote a blog post ridiculing them after performing a serious, apples-to-apples comparison with our own parser. This time, they have used the same underlying technology to announce this new MT breakthrough with similar pride, and I am happily expressing my deep admiration for their wonderful work. This contrast in my attitudes looks a bit weird, but it is actually all based on the facts. In the case of parsing, this school suffers from a lack of naturally occurring labeled data that it could use to perfect the quality, especially when a parser has to be ported to new domains or genres beyond the news corpora. After all, what exists in the sea of language is corpora of raw text, linear strings of words, while the corresponding parse trees are only occasional, artificial objects made by linguists, limited in scope by nature (e.g. the Penn Treebank, or other news-genre parse trees by the Google annotation team). But MT is different: it is a unique NLP area with almost endless, high-quality, naturally occurring "labeled" data in the form of human translations, which people have been producing for ages.

Mao: @wei That is to say, you now embrace or endorse neural MT, a change from your previous views?

Wei:

Yes, I do embrace and endorse the practice. But I have not really changed my general view with respect to the pros and cons of the two schools in AI and NLP. They are complementary and, in the long run, some way of combining the two promises a world better than either one alone.

Mao: What is your real point?

Wei:

Despite the biases we are all more or less born with by human nature, conditioned by what we have done and where we come from in terms of technical background, we all need to observe and respect the basic facts. Just listen to the audio of their GNMT translation by clicking the link above: the fluency and even the faithfulness to my original text have in fact outperformed an ordinary human translator, in my best judgment. If I give this lecture in a classroom and ask an average interpreter without sufficient knowledge of my domain to translate on the spot for me, I bet he will have a hard time performing better than the Google machine did above (of course, human translation gurus are an exception). This miracle-like fact has to be observed and acknowledged. On the other hand, as I said before, no matter how deep the learning reaches, I still do not see how they can catch up with the quality of my deep parsing in the next few years, when they have no way of magically gaining access to the huge labeled data of trees they depend on, especially across the variety of domains and genres. They simply cannot "make bricks without straw" (as an old Chinese saying goes, even the most capable housewife can hardly cook a good meal without rice). In the natural world there are no syntactic trees and structures for them to learn from; there are only linear sentences. The deep learning breakthroughs seen so far are still mainly in supervised learning, which has an almost insatiable appetite for massive labeled data, and that forms its knowledge bottleneck.

Mao: I'm confused. Which one do you believe is stronger? Who is the world's No. 0?

Wei:

Parsing-wise, I am happy to stay as No. 0 if Google insists on being No. 1 in the world. As for MT, it is hard to say, from what I see, between their breakthrough and some highly sophisticated rule-based MT systems out there. But what I can say is that, at a high level, the trend of mainstream statistical MT winning the space from old-school rule-based MT, both in industry and in academia, is more evident today than before. This is not to say that rule-based MT is no longer viable or is coming to an end. There are things in which SMT cannot beat rule-based MT. For example, certain types of seemingly stupid mistakes made by GNMT (quite a few laughable examples of totally wrong or opposite translations have been illustrated in this salon in the last few days) are almost never seen in rule-based MT systems.

Dong:

Here is my try of GNMT from Chinese to English:

学习上,初二是一个分水岭,学科数量明显增多,学习方法也有所改变,一些学生能及时调整适应变化,进步很快,由成绩中等上升为优秀。但也有一部分学生存在畏难情绪,将心思用在学习之外,成绩迅速下降,对学习失去兴趣,自暴自弃,从此一蹶不振,这样的同学到了初三往往很难有所突破,中考的失利难以避免。

Learning, the second of a watershed, the number of subjects significantly significantly, learning methods have also changed, some students can adjust to adapt to changes in progress, progress quickly, from the middle to rise to outstanding. But there are some students there is Fear of hard feelings, the mind used in the study, the rapid decline in performance, loss of interest in learning, self-abandonment, since the devastated, so the students often difficult to break through the third day,

Mao: This translation cannot be said to be good at all.

Wei:

Right, that is why it calls for an objective comparison to answer your previous question. Currently, as I see it, the data for social media and casual text are certainly not enough, hence the translation of online messages is still not their forte. As for the textual sample Prof. Dong showed us above, Mao said the Google translation is not of the good quality expected. But even so, I still see impressive progress made there. Before the deep learning time, the SMT results from Chinese to English were hardly readable, and now they can generally be read aloud and roughly understood. There is a lot of progress worth noting here.

Ma:

In fields with big data, DL methods have advanced by leaps and bounds in recent years. I know a number of experts who used to be biased against DL and have changed their views after seeing the results. However, DL is still basically not effective in the IR field so far, although there are signs of it slowly penetrating IR.

Dong:

The key to NMT is "looking nice". So to people who do not understand the original source text, it sounds like a smooth translation. But isn't it a "liar" if a translation loses its faithfulness to the original? This is the Achilles' heel of NMT.

Ma: @Dong, I think all statistical methods have this weak point.

Wei:

Indeed, each has its pros and cons. Today I have listened to the Google translation of my blog three times and am still amazed at what they have achieved. There are always some mistakes I can pick out here and there. But to err is human, let alone a machine, right? Not to mention that the community will not stop advancing and trying to correct mistakes. From the intelligibility and fluency perspectives, I have been served super satisfactorily today. And this occurs between two languages with no historical kinship whatsoever.

Dong:

Some leading managers said to me years ago, "In fact, even if machine translation is only 50 percent correct, it does not matter. The problem is that it cannot tell me which half it cannot translate well. If it could, I could always save half the labor and hire a human translator to translate only the other half." I replied that I was not able to make a system do that. I have been concerned about this issue ever since, up to today, when there is a lot of noise about MT replacing human translation any time now. It is kind of like having McDonald's and then saying you do not need a fine restaurant for French delicacies. Not to mention that machine translation today still cannot be compared to McDonald's. Computers, with machine translation and the like, are in essence a toy given by God for us humans to play with. God never agreed to equip us with the ability to copy ourselves.
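(An editorial aside: the workflow the managers wished for is easy to write down once a trustworthy per-sentence confidence score exists. The sketch below simply assumes such scores are given; the scores, the threshold, and the function name are all hypothetical, and producing reliable scores is exactly the part Dong says he could not build.)

```python
from typing import List, Tuple


def triage(scored: List[Tuple[str, float]],
           threshold: float = 0.5) -> Tuple[List[str], List[str]]:
    """Split (sentence, confidence) pairs into two lists:
    sentences whose machine translation is kept, and sentences
    routed to a human translator because confidence is too low."""
    keep = [sentence for sentence, conf in scored if conf >= threshold]
    to_human = [sentence for sentence, conf in scored if conf < threshold]
    return keep, to_human


# Hypothetical confidence scores, invented for illustration only.
scored_sentences = [
    ("sentence A", 0.92),
    ("sentence B", 0.31),
    ("sentence C", 0.58),
    ("sentence D", 0.12),
]

keep, to_human = triage(scored_sentences)
print("keep MT output:", keep)
print("send to human: ", to_human)
```

With scores like these, half of the sentences would go to the human translator, which is the labor saving the managers had in mind. The routing is trivial; whether the scores can be trusted is the open question.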

Why did GNMT first choose a language pair like Chinese-to-English to showcase, and not the other way round? This is very shrewd of them. Even if the translation is wrong or misses the point, it is usually at least fluent in this new model, unlike the output of the traditional models, which looks and sounds broken, silly and erroneous. This is characteristic of NMT: it selects what is most similar in the translation corpus. As a vast number of English readers do not understand Chinese, it is easy to impress them with how great the new MT is, even for a difficult language pair.

Wei:

Correct. A closer look reveals that this "breakthrough" lies more in the fluency of the target language than in faithfulness to the source language, achieving readability at the cost of accuracy. But this is just the beginning of a major shift. I can fully understand the GNMT people's joy and pride in the face of a breakthrough like this. In our career, we do not always have that type of moment for celebration.

Deep parsing is the crown of NLP. It remains to be seen how they can beat us in handling domains and genres lacking labeled data. I wish them good luck, and the day they prove they can make better parsers than mine will be the day of my retirement. To my mind, it does not look as if that day is drawing near. I wish I were wrong, so that I could travel the world worry-free, knowing that my dream has been better realized by my colleagues.

Thanks to Google Translate at https://translate.google.com/ for helping to translate this Chinese blog into English, which was post-edited by myself.

[Related]

Wei’s Introduction to NLP Architecture Translated by Google

"OVERVIEW OF NATURAL LANGUAGE PROCESSING"

"NLP White Paper: Overview of Our NLP Core Engine"

Introduction to NLP Architecture

It is untrue that Google SyntaxNet is the "world’s most accurate parser"

Announcing SyntaxNet: The World’s Most Accurate Parser Goes Open

Is Google SyntaxNet Really the World’s Most Accurate Parser?