Translator's note:

This article, written in Chinese by Prof. S. Bai, is a wonderful piece of writing that I recommend to all natural language scholars. Prof. Bai's critical study of Chomsky's formal language theory as applied to natural language reaches a depth I have not seen since Chomsky's revolution in the 1950s. After decades in which so many scholars have published so many papers on Chomsky, this novel "caterpillar" theory still stands out. Based on my decades of study and practice in natural language parsing, it strikes me as an insight that offers a much clearer and deeper explanation of how natural language should be modeled formally. (In our practice, I call the caterpillar FSA++, an extension of the regular grammar formalism that is adequate for multi-level deep parsing of natural language.) For example, many people have been trapped in Chomsky's recursion theory and have made endless, futile attempts at a linear or near-linear algorithm for the so-called recursive nature of natural language, which is practically non-existent (see Chomsky's Negative Impact). There used to be heated debates in computational linguistics over whether natural language is context-free, context-sensitive, or mildly context-sensitive, as some scholars call it. Such debates mechanically apply Chomsky's formal language hierarchy to natural languages and are trapped in metaphysical academic controversies, far from language facts and data. In contrast, Prof. Bai's original "caterpillar" theory presents a novel picture that offers insight into the true nature of natural languages.

S. Bai: Natural Language Caterpillar Breaks through Chomsky's Castle

Tags: Chomsky Hierarchy, computational linguistics, Natural Language Processing, linear speed

This is a technical article; do not be fooled by the title, which may sound like a story about a bug in some VIP's castle. If you are neither an NLP professional nor an NLP fan, you can stop here; there is no need to continue the journey with me on this topic.

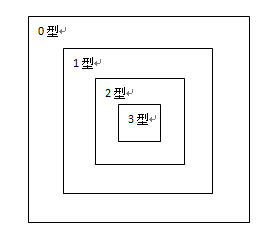

Chomsky's Castle refers to the famous Chomsky Hierarchy of formal language theory, built more than half a century ago by Noam Chomsky, the father of contemporary linguistics. According to this theory, the language castle has four concentric walls. The outermost wall is Type-0, also called Phrase Structure Grammar, corresponding to a Turing machine. The second wall is Type-1, or Context-sensitive Grammar (CSG), corresponding to a linear bounded automaton; recognition is intractable in the worst case (the general problem is PSPACE-complete, and hence at least NP-hard). The third wall is Type-2, or Context-free Grammar (CFG), corresponding to a pushdown automaton, with polynomial time complexity: the best known worst-case bound lies somewhere between quadratic and cubic in the length of the input sentence. The innermost wall is Type-3, or Regular Grammar, corresponding to deterministic finite state automata, with linear time complexity. A sketch of the four-wall Chomsky Castle is illustrated below.

Chomsky's castle has influenced generations of scholars, mainly along two lines. The first line of impact can be called "the outward fear syndrome". Because parsing at the second wall (CSG) is at least NP-hard in the worst case, everything at and beyond that wall is a Forbidden City unless P=NP can be proved. Thus, natural language parsing has had to be confined within the third wall (CFG). Everyone knows that natural language involves some context sensitivity, but the computation is no longer tractable once it goes beyond the third wall of CFG, so context sensitivity has to be left out.

The second line of impact can be called "the inward perfection syndrome". The initial success of Type-2 grammar (CFG) was followed by a severe abuse of recursion. As the number of recursive layers increases even slightly, the acceptability of a sentence quickly drops to almost zero. For example, "The person that hit Peter is John" looks fine, but "The person that hit Peter that met Tom is John" starts to sound weird, and "The person that hit Peter that met Tom that married Mary is John" is gibberish. In fact, most of the resources spent on parsing efficiency go to this abuse of recursion, coping with gibberish-like sentences that are rarely seen in real-life language. For natural language processing to be practical, the pursuit of linear speed cannot be overemphasized. If we reflect on the efficiency of human language understanding, the conclusion is surely "linear speed" with respect to the length of the speech input. The abuse of recursion is most likely triggered by "the inward perfection syndrome": we try to cover every inch of the land within the third wall of CFG, even areas piled with gibberish or garbage.
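To make the point about recursion depth concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a method from the article. It assumes embedded clauses are marked with brackets, and the function name `accepts_bounded_nesting` and the cap `max_depth` are hypothetical. Once the nesting depth is capped, the only memory needed is a small counter, so the recognizer is a finite-state device that makes a single left-to-right pass.

```python
def accepts_bounded_nesting(tokens, max_depth=2):
    """Accept well-nested "(" ... ")" sequences whose nesting never exceeds max_depth.

    Assumption for illustration: "(" opens an embedded clause and ")" closes it.
    The only state is `depth`, an integer in 0..max_depth, so there are
    max_depth + 1 states and the input is scanned exactly once.
    """
    depth = 0
    for tok in tokens:
        if tok == "(":
            depth += 1
            if depth > max_depth:
                return False  # deeper embedding than we are willing to parse
        elif tok == ")":
            if depth == 0:
                return False  # closing bracket without a matching opener
            depth -= 1
        # any other token is an ordinary word and leaves the state unchanged
    return depth == 0


# One level of embedding is accepted; three levels exceed the cap of two.
shallow = "the person ( that hit Peter ) is John".split()
deep = "a ( b ( c ( d ) ) ) e".split()
print(accepts_bounded_nesting(shallow), accepts_bounded_nesting(deep))
```

The design choice is the cap itself: unbounded nesting would require a stack (a pushdown automaton), while a small fixed cap trades coverage of rarely seen deep embeddings for a single linear scan.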

In a sense, one reason why the statistical approach has overshadowed the rule-based approach for so long in academic natural language processing is the combined effect of these two syndromes. Researchers have made all kinds of efforts to overcome these effects, which are reviewed below one by one.

Along the line of the outward fear syndrome, evidence against context-freeness has been found in some constructions in Swiss German. Chinese has similar examples that express a respective correspondence between conjoined items and their descriptions. For example, “张三、李四、王五的年龄分别是25岁、32岁、27岁,出生地分别是武汉、成都、苏州” ("The ages of Zhang San, Li Si, and Wang Wu are 25, 32, and 27 respectively, and their birthplaces are Wuhan, Chengdu, and Suzhou respectively"). Here, the three named entities constitute a list of nouns. The length of the conjoined list cannot be predetermined, and the lists of descriptors likewise vary in length, but the key condition is that each descriptor list must correspond to the antecedent list of nouns one by one. This respective correspondence is beyond the expressive power of the context-free formalism; it needs to get outside the third wall.
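The respective construction resembles the textbook language aⁿbⁿcⁿ, which is not context-free: three lists of unbounded length must all have the same number of items. The sketch below is a simplified illustration with hypothetical helper names (`split_list`, `respectively_consistent`), and it assumes the lists have already been extracted as comma-separated English strings. It shows that the correspondence itself can be checked in one pass by counting, even though no context-free grammar can enforce it.

```python
import re


def split_list(text):
    """Split a conjoined list such as "25, 32, and 27" into its items.

    Assumption for illustration: items are separated by commas and/or "and".
    """
    parts = re.split(r",|\band\b", text)
    return [p.strip() for p in parts if p.strip()]


def respectively_consistent(subjects, *descriptor_lists):
    """Return True if every descriptor list has exactly one item per subject."""
    n = len(split_list(subjects))
    return all(len(split_list(d)) == n for d in descriptor_lists)


# The example from the text: three people, three ages, three birthplaces.
print(respectively_consistent(
    "Zhang San, Li Si, Wang Wu",
    "25, 32, and 27",
    "Wuhan, Chengdu, Suzhou",
))
```

The check only counts items; pairing each person with the matching age and birthplace would be a simple `zip` over the same lists.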

As for overcoming "the inward perfection syndrome", the pursuit of "linear speed" in NLP has never stopped. It ranges from the look-ahead mechanism of LR(k) grammars, to cascaded finite state automata, to probabilistic CFG parsers that are trained on a large treebank and eventually converted to an n-gram (n ≥ 5) model. It should also include RNN/LSTM, the statistical school's own pursuit of deep parsing. All these efforts strive to define a subclass of Type-2 CFG that reaches linear speed while retaining adequate linguistic power. In fact, all parsers that have survived the competition with statistical methods are to some degree a result of overcoming "the inward perfection syndrome", achieving some success in the pursuit of linear speed while respecting linguistic principles. The resulting restricted subclass, compared to the area within the original third wall of CFG, is a greatly "squashed" land.
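As a rough illustration of the cascaded finite-state idea mentioned above, the following sketch applies a fixed sequence of pattern levels to a string of part-of-speech tags, with each level rewriting the output of the previous one. The tag set, the four levels, and the name `cascade_parse` are assumptions made for this example, not taken from any particular parser. Python regular expressions stand in for the automata; since the `re` module is a backtracking matcher, the sketch shows the layering rather than a guaranteed linear-time implementation.

```python
import re

# Each level is one finite-state pattern over the tag string. The levels run in
# a fixed order and no level calls itself, so there is no recursion.
CASCADE = [
    ("NP", re.compile(r"\b(?:DET )?(?:ADJ )*NOUN\b")),  # noun phrase chunks
    ("PP", re.compile(r"\bPREP NP\b")),                 # prepositional phrases
    ("VP", re.compile(r"\bVERB NP(?: PP)*\b")),         # verb phrase with modifiers
    ("S", re.compile(r"\bNP VP\b")),                    # clause
]


def cascade_parse(tags):
    """Return the tag string after each level of the cascade."""
    seq = " ".join(tags)
    trace = [seq]
    for label, pattern in CASCADE:
        seq = pattern.sub(label, seq)  # replace every match with its phrase label
        trace.append(seq)
    return trace


# Hypothetical tags for a sentence like "the old man saw a dog in parks".
for step in cascade_parse(["DET", "ADJ", "NOUN", "VERB", "DET", "NOUN", "PREP", "NOUN"]):
    print(step)
```

Each level sees only the flat output of the level below it, so depth of structure comes from the number of levels rather than from recursive rules.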

If we agree that everything in parsing should take real-life natural language as both its starting point and its ultimate landing point, it is easy to see that the limited outward breakthrough and the massive inward compression are two sides of the same coin. We want a formalism that balances both sides. In other words, our ideal natural language parsing formalism should look like a linguistic "caterpillar" breaking through the walls of Chomsky's castle, as illustrated below:

It seems to me that such a "caterpillar" may have already been found by someone. It will not take too long before we can confirm it.

Original article in Chinese from 《穿越乔家大院寻找“毛毛虫”》

Translated by Dr. Wei Li

【Related】

K. Church: A Pendulum Swung Too Far, Linguistic Issues in Language Technology, 2011; 6(5)