In my article "Pride and Prejudice of Main Stream", the first myth listed as top 10 misconceptions in NLP is as follows:

[Hand-crafted Myth] Rule-based system faces a knowledge bottleneck of hand-crafted development while a machine learning system involves automatic training (implying no knowledge bottleneck).

While there are numerous misconceptions on the old school of rule systems , this hand-crafted myth can be regarded as the source of all. Just take a review of NLP papers, no matter what are the language phenomena being discussed, it's almost cliche to cite a couple of old school work to demonstrate superiority of machine learning algorithms, and the reason for the attack only needs one sentence, to the effect that the hand-crafted rules lead to a system "difficult to develop" (or "difficult to scale up", "with low efficiency", "lacking robustness", etc.), or simply rejecting it like this, "literature [1], [2] and [3] have tried to handle the problem in different aspects, but these systems are all hand-crafted". Once labeled with hand-crafting, one does not even need to discuss the effect and quality. Hand-craft becomes the rule system's "original sin", the linguists crafting rules, therefore, become the community's second-class citizens bearing the sin.

So what is wrong with hand-crafting or coding linguistic rules for computer processing of languages? NLP development is software engineering. From software engineering perspective, hand-crafting is programming while machine learning belongs to automatic programming. Unless we assume that natural language is a special object whose processing can all be handled by systems automatically programmed or learned by machine learning algorithms, it does not make sense to reject or belittle the practice of coding linguistic rules for developing an NLP system.

For consumer products and arts, hand-craft is definitely a positive word: it represents quality or uniqueness and high value, a legit reason for good price. Why does it become a derogatory term in NLP? The root cause is that in the field of NLP, almost like some collective hypnosis hit in the community, people are intentionally or unintentionally lead to believe that machine learning is the only correct choice. In other words, by criticizing, rejecting or disregarding hand-crafted rule systems, the underlying assumption is that machine learning is a panacea, universal and effective, always a preferred approach over the other school.

The fact of life is, in the face of the complexity of natural language, machine learning from data so far only surfaces the tip of an iceberg of the language monster (called low-hanging fruit by Church in K. Church: A Pendulum Swung Too Far), far from reaching the goal of a complete solution to language understanding and applications. There is no basis to support that machine learning alone can solve all language problems, nor is there any evidence that machine learning necessarily leads to better quality than coding rules by domain specialists (e.g. computational grammarians). Depending on the nature and depth of the NLP tasks, hand-crafted systems actually have more chances of performing better than machine learning, at least for non-trivial and deep level NLP tasks such as parsing, sentiment analysis and information extraction (we have tried and compared both approaches). In fact, the only major reason why they are still there, having survived all the rejections from mainstream and still playing a role in industrial practical applications, is the superior data quality, for otherwise they cannot have been justified for industrial investments at all.

the “forgotten” school: why is it still there? what does it have to offer? The key is the excellent data quality as advantage of a hand-crafted system, not only for precision, but high recall is achievable as well.

quote from On Recall of Grammar Engineering Systems

In the real world, NLP is applied research which eventually must land on the engineering of language applications where the results and quality are evaluated. As an industry, software engineering has attracted many ingenious coding masters, each and every one of them gets recognized for their coding skills, including algorithm design and implementation expertise, which are hand-crafting by nature. Have we ever heard of a star engineer gets criticized for his (manual) programming? With NLP application also as part of software engineering, why should computational linguists coding linguistic rules receive so much criticism while engineers coding other applications get recognized for their hard work? Is it because the NLP application is simpler than other applications? On the contrary, many applications of natural language are more complex and difficult than other types of applications (e.g. graphics software, or word processing apps). The likely reason to explain the different treatment between a general purpose programmer and a linguist knowledge engineer is that the big environment of software engineering does not involve as much prejudice while the small environment of NLP domain is deeply biased, with belief that the automatic programming of an NLP system by machine learning can replace and outperform manual coding for all language projects. For software engineering in general, (manual) programming is the norm and no one believes that programmers' jobs can be replaced by automatic programming in any time foreseeable. Automatic programming, a concept not rare in science fiction for visions like machines making machines, is currently only a research area, for very restricted low-level functions. Rather than placing hope on automatic programming, software engineering as an industry has seen a significant progress on work of the development infrastructures, such as development environment and a rich library of functions to support efficient coding and debugging. Maybe in the future one day, applications can use more and more of automated code to achieve simple modules, but the full automation of constructing any complex software project is nowhere in sight. By any standards, natural language parsing and understanding (beyond shallow level tasks such as classification, clustering or tagging) is a type of complex tasks. Therefore, it is hard to expect machine learning as a manifestation of automatic programming to miraculously replace the manual code for all language applications. The application value of hand-crafting a rule system will continue to exist and evolve for a long time, disregarded or not.

"Automatic" is a fancy word. What a beautiful world it would be if all artificial intelligence and natural languages tasks could be accomplished by automatic machine learning from data. There is, naturally, a high expectation and regard for machine learning breakthrough to help realize this dream of mankind. All this should encourage machine learning experts to continue to innovate to demonstrate its potential, and should not be a reason for the pride and prejudice against a competitive school or other approaches.

Before we embark on further discussions on the so-called rule system's knowledge bottleneck defect, it is worth mentioning that the word "automatic" refers to the system development, not to be confused with running the system. At the application level, whether it is a machine-learned system or a manual system coded by domain programmers (linguists), the system is always run fully automatically, with no human interference. Although this is an obvious fact for both types of systems, I have seen people get confused so to equate hand-crafted NLP system with manual or semi-automatic applications.

Is hand-crafting rules a knowledge bottleneck for its development? Yes, there is no denying or a need to deny that. The bottleneck is reflected in the system development cycle. But keep in mind that this "bottleneck" is common to all large software engineering projects, it is a resources cost, not only introduced by NLP. From this perspective, the knowledge bottleneck argument against hand-crafted system cannot really stand, unless it can be proved that machine learning can do all NLP equally well, free of knowledge bottleneck: it might be not far from truth for some special low-level tasks, e.g. document classification and word clustering, but is definitely misleading or incorrect for NLP in general, a point to be discussed below in details shortly.

Here are the ballpark estimates based on our decades of NLP practice and experiences. For shallow level NLP tasks (such as Named Entity tagging, Chinese segmentation), a rule approach needs at least three months of one linguist coding and debugging the rules, supported by at least half an engineer for tools support and platform maintenance, in order to come up with a decent system for initial release and running. As for deep NLP tasks (such as deep parsing, deep sentiments beyond thumbs-up and thumbs-down classification), one should not expect a working engine to be built up without due resources that at least involve one computational linguist coding rules for one year, coupled with half an engineer for platform and tools support and half an engineer for independent QA (quality assurance) support. Of course, the labor resources requirements vary according to the quality of the developers (especially the linguistic expertise of the knowledge engineers) and how well the infrastructures and development environment support linguistic development. Also, the above estimates have not included the general costs, as applied to all software applications, e.g. the GUI development at app level and operations in running the developed engines.

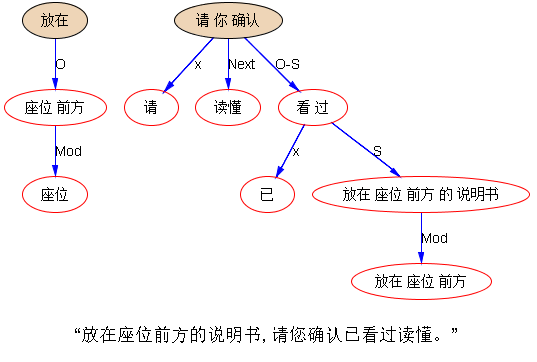

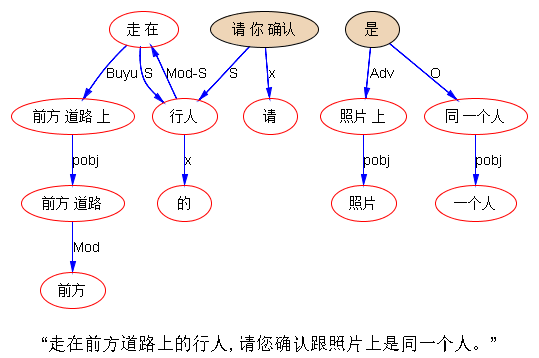

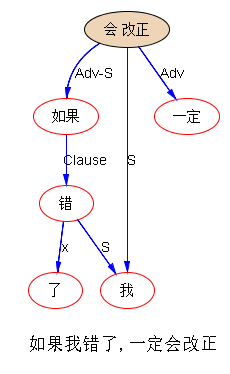

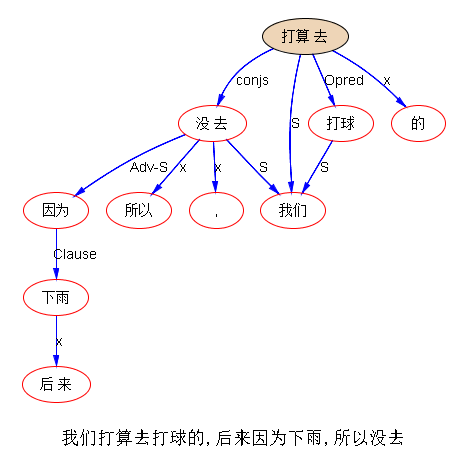

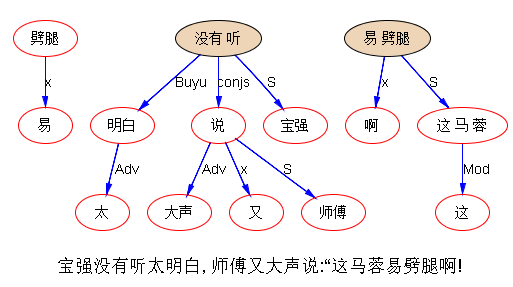

Let us present the scene of the modern day rule-based system development. A hand-crafted NLP rule system is based on compiled computational grammars which are nowadays often architected as an integrated pipeline of different modules from shallow processing up to deep processing. A grammar is a set of linguistic rules encoded in some formalism, which is the core of a module intended to achieve a defined function in language processing, e.g. a module for shallow parsing may target noun phrase (NP) as its object for identification and chunking. What happens in grammar engineering is not much different from other software engineering projects. As knowledge engineer, a computational linguist codes a rule in an NLP-specific language, based on a development corpus. The development is data-driven, each line of rule code goes through rigid unit tests and then regression tests before it is submitted as part of the updated system for independent QA to test and feedback. The development is an iterative process and cycle where incremental enhancements on bug reports from QA and/or from the field (customers) serve as a necessary input and step towards better data quality over time.

Depending on the design of the architect, there are all types of information available for the linguist developer to use in crafting a rule’s conditions, e.g. a rule can check any elements of a pattern by enforcing conditions on (i) word or stem itself (i.e. string literal, in cases of capturing, say, idiomatic expressions), and/or (ii) POS (part-of-speech, such as noun, adjective, verb, preposition), (iii) and/or orthography features (e.g. initial upper case, mixed case, token with digits and dots), and/or (iv) morphology features (e.g. tense, aspect, person, number, case, etc. decoded by a previous morphology module), (v) and/or syntactic features (e.g. verb subcategory features such as intransitive, transitive, ditransitive), (vi) and/or lexical semantic features (e.g. human, animal, furniture, food, school, time, location, color, emotion). There are almost infinite combinations of such conditions that can be enforced in rules’ patterns. A linguist’s job is to code such conditions to maximize the benefits of capturing the target language phenomena, a balancing art in engineering through a process of trial and error.

Macroscopically speaking, the rule hand-crafting process is in its essence the same as programmers coding an application, only that linguists usually use a different, very high-level NLP-specific language, in a chosen or designed formalism appropriate for modeling natural language and framework on a platform that is geared towards facilitating NLP work. Hard-coding NLP in a general purpose language like Java is not impossible for prototyping or a toy system. But as natural language is known to be a complex monster, its processing calls for a special formalism (some form or extension of Chomsky's formal language types) and an NLP-oriented language to help implement any non-toy systems that scale. So linguists are trained on the scene of development to be knowledge programmers in hand-crafting linguistic rules. In terms of different levels of languages used for coding, to an extent, it is similar to the contrast between programmers in old days and the modern software engineers today who use so-called high-level languages like Java or C to code. Decades ago, programmers had to use assembly or machine language to code a function. The process and workflow for hand-crafting linguistic rules are just like any software engineers in their daily coding practice, except that the language designed for linguists is so high-level that linguistic developers can concentrate on linguistic challenges without having to worry about low-level technical details of memory allocation, garbage collection or pure code optimization for efficiency, which are taken care of by the NLP platform itself. Everything else follows software development norms to ensure the development stay on track, including unit testing, baselines construction and monitoring, regressions testing, independent QA, code reviews for rules' quality, etc. Each level language has its own star engineer who masters the coding skills. It sounds ridiculous to respect software engineers while belittling linguistic engineers only because the latter are hand-crafting linguistic code as knowledge resources.

The chief architect in this context plays the key role in building a real life robust NLP system that scales. To deep-parse or process natural language, he/she needs to define and design the formalism and language with the necessary extensions, the related data structures, system architecture with the interaction of different levels of linguistic modules in mind (e.g. morpho-syntactic interface), workflow that integrate all components for internal coordination (including patching and handling interdependency and error propagation) and the external coordination with other modules or sub-systems including machine learning or off-shelf tools when needed or felt beneficial. He also needs to ensure efficient development environment and to train new linguists into effective linguistic "coders" with engineering sense following software development norms (knowledge engineers are not trained by schools today). Unlike the mainstream machine learning systems which are by nature robust and scalable, hand-crafted systems' robustness and scalability depend largely on the design and deep skills of the architect. The architect defines the NLP platform with specs for its core engine compiler and runner, plus the debugger in a friendly development environment. He must also work with product managers to turn their requirements into operational specs for linguistic development, in a process we call semantic grounding to applications from linguistic processing. The success of a large NLP system based on hand-crafted rules is never a simple accumulation of linguistics resources such as computational lexicons and grammars using a fixed formalism (e.g. CFG) and algorithm (e.g. chart-parsing). It calls for seasoned language engineering masters as architects for the system design.

Given the scene of practice for NLP development as describe above, it should be clear that the negative sentiment association with "hand-crafting" is unjustifiable and inappropriate. The only remaining argument against coding rules by hands comes down to the hard work and costs associated with hand-crafted approach, so-called knowledge bottleneck in the rule-based systems. If things can be learned by a machine without cost, why bother using costly linguistic labor? Sounds like a reasonable argument until we examine this closely. First, for this argument to stand, we need proof that machine learning indeed does not incur costs and has no or very little knowledge bottleneck. Second, for this argument to withstand scrutiny, we should be convinced that machine learning can reach the same or better quality than hand-crafted rule approach. Unfortunately, neither of these necessarily hold true. Let us study them one by one.

As is known to all, any non-trivial NLP task is by nature based on linguistic knowledge, irrespective of what form the knowledge is learned or encoded. Knowledge needs to be formalized in some form to support NLP, and machine learning is by no means immune to this knowledge resources requirement. In rule-based systems, the knowledge is directly hand-coded by linguists and in case of (supervised) machine learning, knowledge resources take the form of labeled data for the learning algorithm to learn from (indeed, there is so-called unsupervised learning which needs no labeled data and is supposed to learn from raw data, but that is research-oriented and hardly practical for any non-trivial NLP, so we leave it aside for now). Although the learning process is automatic, the feature design, the learning algorithm implementation, debugging and fine-tuning are all manual, in addition to the requirement of manual labeling a large training corpus in advance (unless there is an existing labeled corpus available, which is rare; but machine translation is a nice exception as it has the benefit of using existing human translation as labeled aligned corpora for training). The labeling of data is a very tedious manual job. Note that the sparse data challenge represents the need of machine learning for a very large labeled corpus. So it is clear that knowledge bottleneck takes different forms, but it is equally applicable to both approaches. No machine can learn knowledge without costs, and it is incorrect to regard knowledge bottleneck as only a defect for the rule-based system.

One may argue that rules require expert skilled labor, while the labeling of data only requires high school kids or college students with minimal training. So to do a fair comparison of the costs associated, we perhaps need to turn to Karl Marx whose "Das Kapital" has some formula to help convert simple labor to complex labor for exchange of equal value: for a given task with the same level of performance quality (assuming machine learning can reach the quality of professional expertise, which is not necessarily true), how much cheap labor needs to be used to label the required amount of training corpus to make it economically an advantage? Something like that. This varies from task to task and even from location to location (e.g. different minimal wage laws), of course. But the key point here is that knowledge bottleneck challenges both approaches and it is not the case believed by many that machine learning learns a system automatically with no or little cost attached. In fact, things are far more complicated than a simple yes or no in comparing the costs as costs need also to be calculated in a larger context of how many tasks need to be handled and how much underlying knowledge can be shared as reusable resources. We will leave it to a separate writing for the elaboration of the point that when put into the context of developing multiple NLP applications, the rule-based approach which shares the core engine of parsing demonstrates a significant saving on knowledge costs than machine learning.

Let us step back and, for argument's sake, accept that coding rules is indeed more costly than machine learning, so what? Like in any other commodities, hand-crafted products may indeed cost more, they also have better quality and value than products out of mass production. For otherwise a commodity society will leave no room for craftsmen and their products to survive. This is common sense, which also applies to NLP. If not for better quality, no investors will fund any teams that can be replaced by machine learning. What is surprising is that there are so many people, NLP experts included, who believe that machine learning necessarily performs better than hand-crafted systems not only in costs saved but also in quality achieved. While there are low-level NLP tasks such as speech processing and document classification which are not experts' forte as we human have much more restricted memory than computers do, deep NLP involves much more linguistic expertise and design than a simple concept of learning from corpora to expect superior data quality.

In summary, the hand-crafted rule defect is largely a misconception circling around wildly in NLP and reinforced by the mainstream, due to incomplete induction or ignorance of the scene of modern day rule development. It is based on the incorrect assumption that machine learning necessarily handles all NLP tasks with same or better quality but less or no knowledge bottleneck, in comparison with systems based on hand-crafted rules.

Note: This is the author's own translation, with adaptation, of part of our paper which originally appeared in Chinese in Communications of Chinese Computer Federation (CCCF), Issue 8, 2013

[Related]

Domain portability myth in natural language processing

Pride and Prejudice of NLP Main Stream

K. Church: A Pendulum Swung Too Far, Linguistics issues in Language Technology, 2011; 6(5)

Wintner 2009. What Science Underlies Natural Language Engineering? Computational Linguistics, Volume 35, Number 4

Pros and Cons of Two Approaches: Machine Learning vs Grammar Engineering

Overview of Natural Language Processing

Dr. Wei Li’s English Blog on NLP