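[Liwei's note] With the pandemic upon us, scholarship moves slowly, non-mainstream "scholarship" most of all. This installment is a by-product of lockdown isolation and is decidedly niche. Still, taken on its own terms, the architecture of an ontology is a basic topic that goes to the core of AI knowledge systems, even if few people have a taste for it.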

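Bai: Is the relation between “颜色” (color) and “红” (red) the same as the relation between “红” and “大红、深红、砖红、朱红、玫瑰红…” (bright red, deep red, brick red, vermilion, rose red...)?

Color is an attribute name, red is an attribute value, and bright red, deep red, brick red, vermilion, rose red... are refined attribute values. The relation between a value and its refined values has to be treated differently from the relation between a name and its values.

Li: Naturally. An ontology knowledge base such as HowNet (《知网》) draws exactly this distinction. Attribute values are logical adjectives and have their own taxonomy (hypernym-hyponym chain). Attribute names are logical nouns whose direct hypernym is the abstract noun. Each attribute name is paired with its own set of attribute values: 【Taste】 with sour, sweet, bitter, spicy; 【Smell】 with fragrant, foul...; 【Shape】 with square, round, rhombic, triangular, polygonal...; and so on.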

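Bai: Then is there still a semantic category called "attribute"? And where does the "host" attach: to the attribute name, the attribute value, or the attribute?

Li: 【Attribute】 can sit between 【Color】, 【Shape】... and 【abstract noun】.

Bai: That is not what I mean. Given that we already have attribute names and attribute values separately, do we still need attribute? If not, whom does the host go with?

Li: Logical adjectives (属性值|AttributeValue) are logical predicates (Predicate), and predicates dig slots.

Bai: So that means dropping attribute.

Li: Logical adjectives of the 【Shape】 class dig a slot that requires a 【physical object】. So, ontologically, one can say “桌子是圆的” (the table is round) but not “思想是圆的” (the thought is round). Anything that violates the commonsense ontology can only be a metaphor: “圆的思想” (round thought), if anyone really says it, probably means thinking that is "smooth, middle-of-the-road".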
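The slot constraint Li describes can be sketched as a type check against an is-a chain. This is a minimal illustration under assumed names: the `ISA` table, the `SLOT_TYPE` table and both functions are hypothetical and are not taken from HowNet.

```python
# Toy single-parent is-a chains (illustrative names only, not HowNet's).
ISA = {
    "desk": "PhysicalObject",
    "thought": "AbstractObject",
    "PhysicalObject": "thing",
    "AbstractObject": "thing",
}

# Each attribute value (logical adjective) digs a slot typed by the host it expects.
SLOT_TYPE = {"round": "PhysicalObject", "square": "PhysicalObject"}


def is_a(concept, ancestor):
    """Walk the chain upward from `concept`, looking for `ancestor`."""
    while concept is not None:
        if concept == ancestor:
            return True
        concept = ISA.get(concept)
    return False


def fills_literally(value, host):
    """True if `host` satisfies the slot dug by `value`.

    False does not mean the phrase is impossible, only that a
    literal reading is blocked and a metaphorical one is needed.
    """
    return is_a(host, SLOT_TYPE[value])


print(fills_literally("round", "desk"))     # literal reading available
print(fills_literally("round", "thought"))  # literal reading blocked
```

A failed check is where a real system would fall back to a metaphor reading rather than reject the input.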

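Bai: “这个案件的凶手是张三” (the murderer in this case is Zhang San), “杀害李四的凶手是张三” (the murderer who killed Li Si is Zhang San), “其凶手是张三” (its murderer is Zhang San). “凶手” (murderer) is a "role name". Can it get the same treatment as an attribute name?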

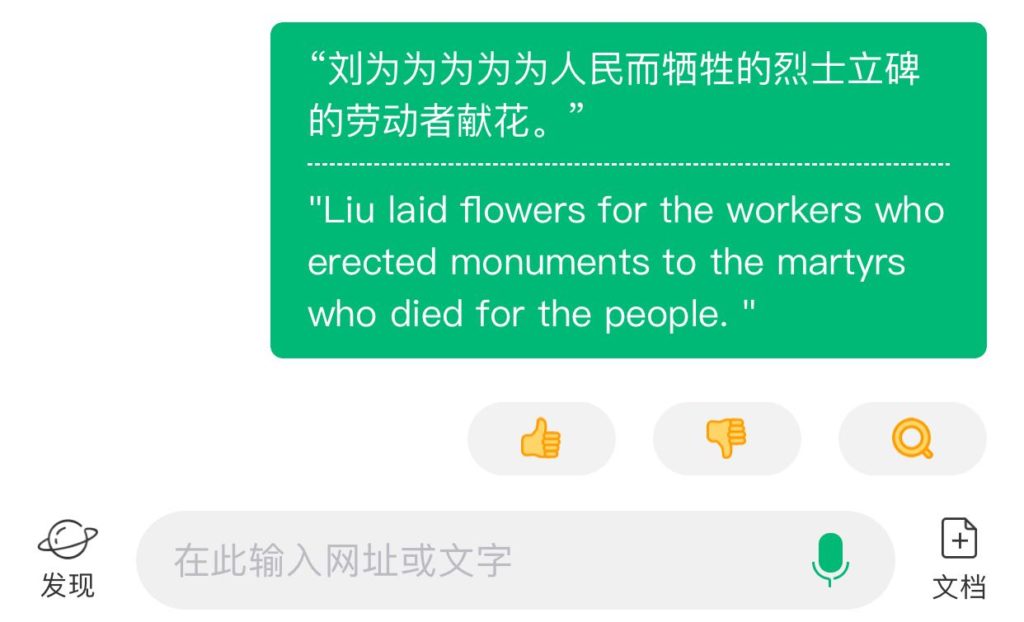

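Take N/X. When X matches a verb filler (the "radish" that goes into the slot), a concrete event is filling the slot; when X matches a noun filler, an abstract event is filling it. An abstract event is the referential generalization of a concrete event. A concrete event can also be generalized predicatively. Red relative to bright red is a predicative generalization; color relative to red is a referential generalization. is-a cannot tell the two apart.

Li: Those are ISA links along two lines, from two different angles, so a child should be allowed two or more parents. The taxonomy in an ontology knowledge base ought to work this way: one chain can be designated the main chain and the others auxiliary chains.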

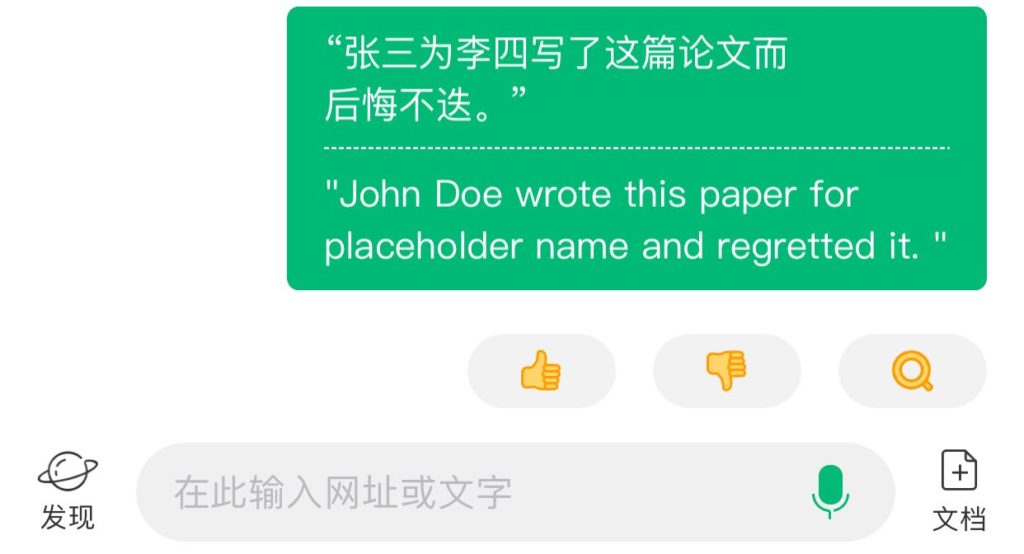

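The pairing of “凶手” with “张三” differs from the pairing of 【attribute name】 with 【attribute value】. The former relates a kind (a role) to a proper name, both of which are logical nouns. The latter is a cross-category correspondence between a logical noun and a logical adjective.

Bai: “这张桌子的颜色是红的。” (The color of this table is red.)

Does 桌子 (table) fill the slot of “颜色”, the slot of “红”, or both? “这张桌子的颜色有点红。” (The color of this table is a bit red.) Who gets filled? Does the table fill color, and color fill red? Or does the table fill both?

If the slot of 颜色 is typed PhysicalObject and so is the slot of 红, then 颜色 cannot fill the slot of 红. The big-S/small-s (major/minor subject) analysis then has a problem.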

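“象鼻子有点长” (the elephant's trunk is a bit long) vs. “桌子颜色有点红” (the table's color is a bit red)

“象鼻子长度有点长” (the length of the elephant's trunk is a bit long) is wordy but does not violate the grammar.

In “桌子腿儿颜色有点红” (the table leg's color is a bit red), the part and the attribute name have similar syntactic status, yet semantically there is still a problem. 桌子腿儿 (table leg) outputs another object outright. 颜色 takes an object as input and outputs an attribute name; 红 takes an object as input and outputs an attribute value. An attribute should be the pair <attribute name, attribute value>. The input of 红 is not an attribute name. One member of the pair can be left to default, provided the mapping from value to name is unambiguous. But 红 also means "popular", so it flirts with another attribute name, 人气 (popularity). Hence in “这个歌手有点红” (this singer is a bit "red", i.e. popular), the singer is a physical object too, but more to the point a public figure.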
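The idea of an attribute as a <name, value> pair whose name may default can be sketched as follows. The table and the function are hypothetical; the only facts taken from the discussion are that 红 (red) can answer to both color and popularity, while a value with a single name can have that name filled in automatically.

```python
# Hypothetical value-to-name table. "red" maps to two attribute names,
# mirroring the 颜色 / 人气 ambiguity of 红.
VALUE_TO_NAMES = {
    "red": ["color", "popularity"],
    "long": ["length"],
    "round": ["shape"],
}


def attribute(value, name=None):
    """Build a (name, value) pair, defaulting the name only when it is unambiguous."""
    candidates = VALUE_TO_NAMES[value]
    if name is not None:
        if name not in candidates:
            raise ValueError(f"{value!r} is not a value of {name!r}")
        return (name, value)
    if len(candidates) == 1:
        return (candidates[0], value)
    return (None, value)  # left open: the host or context has to decide


print(attribute("long"))          # the name defaults
print(attribute("red"))           # the name stays open
print(attribute("red", "color"))  # an explicit name settles it
```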

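Li: The small s “颜色” is an aspect of the big S “桌子腿儿”. The predicate “有点红” requires the big S to fill its slot, and the big S stands in a whole-to-aspect relation to the small s. Syntactically, the small s behaves like a PP adverbial, "in terms of color".

Bai: 腿儿 (leg) is the middle s; 桌子 (table) is the big S.

Li: Because the whole-to-aspect correspondence is common sense, stored in the static ontology, the small s is in fact redundant. That explains why “桌子腿儿颜色有点红” feels wordy: its actual meaning is just “桌子腿儿有点红” (the table leg is a bit red).

Bai: It is because the value-to-name mapping is unique; whether it is common sense is beside the point. In “颜色不太对劲儿” (the color is not quite right), “颜色” cannot be dropped.

Li: A unique value-to-name mapping is common sense, the simplest, classificatory kind: red is a color. An ontology has to express this kind of common sense; it is nothing new and carries no information.

Bai: This is a fact the system can determine by itself, with no need for a commonsense mechanism.

Li: Either way, it all lives in the ontology. The former is concept classification, which is fact and beyond dispute; the latter is tendency-type common sense, which often has exceptions. 【Animal】 eats 【food】; an exception is "some animal ate a stone".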

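Bai: Try replacing “红” with “深” (dark): “这张桌子腿儿颜色深” (this table leg's color is dark). Who fills the slot of “深”? “这张桌子腿儿颜色过深”, “这张桌子腿儿颜色太深” (this table leg's color is too dark). “这张桌子腿儿颜色过红”, “这张桌子腿儿颜色太红” (this table leg's color is too red): who fills the slot of 红?

An attribute name and a value occurring together in one grammatical sentence cannot be sidestepped. How the slots get filled has to be spelled out. “这张桌子腿儿颜色太红” is not semantically equal to “这张桌子腿儿太红”. It can mean not only "red to an excessive degree" but also "leaning too far toward red".

In an ontology, names and values must each be given their proper place, and their slots must be given the corresponding ontological labels as well.

Li: “颜色” (color) varies along “深浅” (dark/light); “腿儿” (leg) varies along “高矮、粗细” (tall/short, thick/thin).

Bai: Tall/short are values under the attribute name "height", and thick/thin are values under the attribute name "radial size". They stand to their names exactly as red stands to color; we cannot favor one over the other.

Li: 【Attribute name】, 【attribute value】 and 【physical object】 form a triangle. 【Attribute name】 can serve as the bridge, which gives generality. But the correspondence between 【attribute name】 and 【physical object】 is static ontological knowledge with no information value; it belongs to the description of type appropriateness in the ontology's schema definition. The filler that an 【attribute value】 takes, by contrast, is dynamic knowledge, and that is the information language understanding actually yields.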

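Bai: “颜色深” (the color is dark) is already informative, and 深 is not an attribute value. “颜色花哨” (the color is gaudy), “颜色怯” (the color is tacky), “颜色俗” (the color is vulgar): none of these are attribute values, and all are informative.

Li: That is level entanglement. “深”, “浅”, “花哨”, “俗” and “怯” are of course 【attribute values】 too.

Bai: They are not specific colors. How can you call them attribute values?

Li: The fillers of an 【attribute value】 should not be limited to 【physical objects】. Here the filler is no longer a concrete 【physical object】 but something abstract, namely one aspect of the object, its 【color】. Likewise, abstract concepts such as 【degree】 and 【vertical height】 are also described as deep or shallow.

Bai: Wait, the host has changed. These are comments on the color. Vertical height is an attribute value.

Li: Specific vertical heights such as “深、浅、高、矮” (deep, shallow, tall, short) are 【attribute values】, but "vertical height" itself is an 【attribute name】.

Bai: The logic here does not flow; it is full of problems.

Li: There is no problem. If it is misread, that is interference from level entanglement.

Logical nouns divide into concrete and abstract; what deep/shallow describes is an abstract noun.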

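Bai: "Logical noun" is not an ontological concept, is it?

Li: It is. It is a node at the top (TOP) of the ontological concept chain.

Bai: Mr. Dong calls it “万物” (all things).

Li: Right. Mr. Dong names it 【thing|事物】. Under it there are 【abstract】 and 【concrete】, and under 【concrete】 there is 【PhysicalObject】. 【thing】 is what I call the logical noun, a cross-lingual ontological concept.

Bai: It is not common in the ontology literature.

Li: The name does not matter. The substance is that POS differs from language to language, but the conceptual ontology behind it has counterparts. 【thing】 is 【logical n】, and 【AttributeValue】 is 【logical a】.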

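Bai: Does "behavior" count as a thing? Does “红” count as a thing?

“红是一种象征革命的颜色” (red is a color that symbolizes revolution), “那是一种非常大气的红” (that is a very grand red).

Li: No. 【Behavior】 is 【logical v】, and “红” is 【ColorValue】, which belongs to 【logical a】. The “红” in those examples is a logical adjective that has been nominalized in a particular language and become a noun, but the ontological concept is constant: it is still 【logical a】.

Bai: Does eat count as an instance of "behavior"?

Li: eat counts as 【behavior】 and is 【logical v】. In a particular language such as English, "eating", with the help of the -ing morphology, becomes a thing, an abstract noun.

Bai: There should not be two sememes. Treating it as two sememes runs into many problems; it must be one.

Li: Right, there should not be two sememes in the ontology, so "eat" as a concept is always a logical verb and “红” is always a logical adjective. There is a simple way to handle this: the lexicon carries a single ontological class, while the syntactic class may shift. Morphological languages mark the shift with morphology; non-morphological languages can use a zero form and let context decide. eat as a concept is logical v, but when it is used as a word in a particular language it may be POS N or POS V.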
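A minimal sketch of "one ontological class, shifting syntactic class": each word form points to a single shared concept, and only the POS varies. The `Concept` and `Word` classes and the tiny word list are assumptions made for illustration, not the layout of any actual lexicon.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Concept:
    name: str
    logical_cat: str  # "n", "v" or "a"; fixed once, in the ontology


@dataclass(frozen=True)
class Word:
    form: str
    pos: str          # language-specific and free to vary
    concept: Concept  # always the same sememe


EAT = Concept("eat", "v")
RED = Concept("red", "a")

# Hypothetical entries: the same concept surfaces with different POS.
WORDS = [
    Word("eat", "V", EAT),
    Word("eating", "N", EAT),  # nominalized by -ing
    Word("red", "A", RED),
    Word("red", "N", RED),     # zero-derived noun, as in "a very grand red"
]


def logical_category(form, pos):
    """Look up the ontological category behind a (form, POS) pair."""
    for word in WORDS:
        if word.form == form and word.pos == pos:
            return word.concept.logical_cat
    raise KeyError((form, pos))


print(logical_category("eating", "N"))  # still logical v
print(logical_category("red", "N"))     # still logical a
```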

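Bai: Generalization can go in two directions, referential and predicative, so thing is the ultimate root of referential generalization. Below it, via abstract event, abstract property and abstract behavior, there is a smooth transition to concrete event, concrete property and concrete behavior. Predicative generalization should have an ultimate root of its own.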

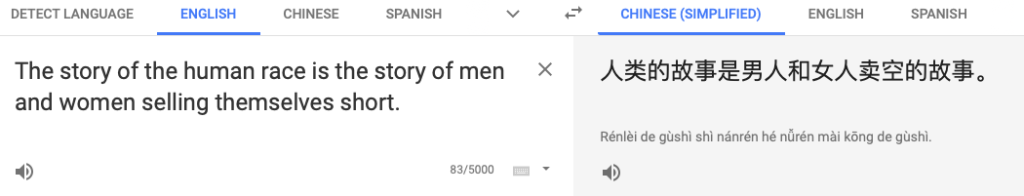

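eat should also be covered by thing, via "behavior", just as “红” is covered by thing via “颜色”. The fork between referential and predicative is supplied by syntax. Underneath there is one crew; on top there are two nameplates. If it is not built this way there will be huge trouble. Syntactic coercion can trigger the fork, and so can category words (attribute names).

Li: Exactly. Different routes to the same end, great minds thinking alike. The main principles are the same; the differences are technical ones at the level of representation.

Bai: “红这种颜色在中国股市里表示涨,在西方股市里表示跌” (the color red means a rise in the Chinese stock market and a fall in Western stock markets).

A category word is a word that names a non-leaf node of the ontology.

Li: Category words are what the software world calls "reserved words". Reserved words are meta-concepts, yet natural language has actual vocabulary corresponding to them.

In terms of representation, once a child is allowed to have two parents, the ontology becomes easy to handle.

Bai: A DAG.

Li: Right. One hypernym of “红” is 【ColorValue】, which belongs to 【AttributeValue】 (what I call logical a); the other hypernym of “红” is 【颜色|Color】.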

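Bai: All things, events included. That is fundamental.

Li: Perhaps we need to ask Einstein about that, LOL.

Bai: It is like program code also being data, which has been the game since von Neumann. Executing the program is another matter. The occurrence, unfolding or execution of an event is likewise another matter.

Li: Purely as formal representation, it is just a naming issue. Suppose 【all things】 is the sole TOP; under TOP there are still n, a and v.

Bai: n, a and v are not direct children of TOP. Red comes under color, and eat comes under behavior. Color and behavior, which I call "category words" here, lead upward to 【all things】; only downward do n, v and a split. The category words are the fork points.

Li: That works too, and is perhaps more natural. It really just promotes members of the abstract subclass formerly under n by one node, letting them serve as bridges to TOP. I do not see an essential change: the one-child-multiple-parent chains we need are all still there.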

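Between 【Color】 (ColorValue), 【Shape】, 【Appearance】, 【Character】 and so on and TOP, there should be a node called AttributeValue (logical a). The chains look roughly like this:

red --> color --> attribute --> TOP

handsome --> appearance --> attribute --> TOP

eat --> action --> behaviour --> TOP

table --> furniture --> product --> physical object --> concrete --> thing --> TOP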

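Bai: Right.

Li: Same wine in a new bottle. As I see it there are three top concepts: thing is logical n, behaviour is logical v, and attribute (i.e. AttributeValue) is logical a.

Bai: The problem is that the edges below are not all is-a.

Li: As for adding a TOP above them to rule over all three, that is a philosophical stance, not a practical need.

Bai: No, that is not how they are unified. Attribute, state and relation are mutually independent, yet any of them may be an adjective.

Li: 红 --> ColorValue --> AttributeValue (logical a) --> Predicate; 红 --> Color --> AbstractObject --> thing (logical n)

That is, let “红” have two parents: ColorValue is the class of attribute values and belongs to logical a, while Color is the attribute name and belongs to logical n.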
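The two chains for 红 amount to a small DAG in which a node may list more than one parent. The sketch below assumes a plain dictionary representation and treats the first listed parent as the main chain; both choices are illustrative and say nothing about how HowNet stores its taxonomy.

```python
# Parent lists for a toy DAG; the first parent is taken as the main chain.
PARENTS = {
    "red": ["ColorValue", "Color"],
    "ColorValue": ["AttributeValue"],
    "AttributeValue": ["Predicate"],
    "Color": ["AbstractObject"],
    "AbstractObject": ["thing"],
}


def chains(node):
    """Return every upward path from `node` to a root, main chain first."""
    parents = PARENTS.get(node, [])
    if not parents:
        return [[node]]
    return [[node] + rest for parent in parents for rest in chains(parent)]


def is_a(node, ancestor):
    """True if `ancestor` lies on any chain above `node`."""
    return any(ancestor in chain for chain in chains(node))


for chain in chains("red"):
    print(" --> ".join(chain))
```

With this layout 红 is reachable from both Predicate and thing, which is the "one child, two parents" arrangement, while the order of the parent list keeps track of which chain is primary.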

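Bai: “这盏灯很亮” (this lamp is very bright) speaks of an attribute. “这盏灯真亮” (this lamp is really bright) speaks of a state.

Li: Why divide so finely?

Bai: 亮 (bright) as an attribute is only about some qualitative or quantitative brightness rating; the lamp need not be lit. 亮 as a state means actually lit, with a brightness one can perceive. The latter entails that the lamp is on; the former does not. The point is not that there are two lexical entries or sememes for 亮, but that there are two forks on the way up. The path to attribute and the path to state are different.

Li: Going by the sentences alone, most people make no such distinction, I think. “很” and “真” are both degree adverbs.

Bai: Hard to say. Most people have no notion of grammar either, yet they make every distinction that needs making. One could design a questionnaire. I believe most people can draw the inference.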

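Li: By the way, 30 years ago, when Mr. Dong was first conceiving HowNet, we happened to spend over half a year together on a project at the Gaoli (高立) company in Zhongguancun, and he talked about his vision for HowNet. At the time I found the logical semantics and ontological knowledge networks he advocated especially interesting. But back in the dorm, trying to sort the ideas out on paper, I soon felt I had fallen into the abyss of semantics, completely befuddled, and quickly lost my nerve. I had just entered the field, with too little seasoning and too little practice, and could only back off... 30 years later I get to enjoy the fruits of his labor.

Bai: I do not think HowNet has reached the point where one can simply sit back and enjoy the fruits.

Li: Ha, people's requirements differ. As mentioned earlier, HowNet has encoded things like color and attributes. There is also a great deal of fine-grained classification and sorting-out work, plus system building. I find it self-contained, self-consistent and fairly comprehensive.

Bai: All of that is to be expected, but it is far from enough. As a digital dictionary it is very good. As computable infrastructure it still has room for improvement.

Li: It can of course be adapted for use. Here is an example.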

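HowNet separates Attribute from AttributeValue, named in parallel but sitting on two branches. In effect this doubles the number of features. While adopting it, I felt that although this cleanly separates 【attribute name】 (logical noun) from 【attribute value】 (logical adjective) by category, so many extra features are unnecessary and cumbersome. So I made the following adaptation:

颜色 (color): color category

红色 (red): color attribute

The two share the feature color. The distinction between them can be expressed by ANDing features. Copying HowNet as is, the lexicon markup would look roughly like this:

颜色 (color): ColorAttribute

红色 (red): Color

As for the correspondence between ColorAttribute and Color, that link is kept inside HowNet (apart from the mnemonic effect of the naming). After the adaptation, with both entries using color, the relation shows up directly in the lexicon features.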
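The adapted markup can be sketched as feature sets combined by AND. The four feature names follow the two example entries above; the extra lexicon entries and the helper functions are hypothetical additions for illustration.

```python
# Hypothetical lexicon in the adapted style: a shared family feature
# ("color", "shape") plus one discriminating feature.
LEXICON = {
    "颜色": {"color", "category"},    # the word "color"
    "色彩": {"color", "category"},    # a synonym of "color"
    "红色": {"color", "attribute"},   # "red"
    "玫瑰红": {"color", "attribute"},  # "rose red"
    "形状": {"shape", "category"},    # the word "shape"
    "圆": {"shape", "attribute"},     # "round"
}


def has_all(word, *features):
    """AND of features: true only if the word carries every one of them."""
    return set(features) <= LEXICON[word]


def category_words(value_word):
    """Find the category words that share a family feature with a value word."""
    family = LEXICON[value_word] - {"attribute", "category"}
    return {
        word
        for word, feats in LEXICON.items()
        if "category" in feats and family <= feats
    }


print(has_all("红色", "color", "attribute"))
print(category_words("红色"))
```

Here the name-value link falls out of the shared feature, so no separate table relating ColorAttribute to Color is needed.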

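The benefit is that HowNet has basically sorted the concepts out; this is merely a pragmatic technical adaptation. In a language's vocabulary, the logical adjectives that express attribute values far outnumber the words for their categories. There are hundreds of color words, but only one or two words for "color" itself (“颜色”, “色彩”). Naming a separate set of features for the latter seems poor value and inconvenient.

Bai: It is a "hypernym" in some sense anyway.

Li: One approach strings everything on a single vertical line; Mr. Dong inserts a horizontal line in the middle, which is equivalent to one child with two parents. The means of representation differ. One horizontal line plus one vertical line is not without reason: after all, the ISA from “玫瑰红” (rose red) to “红” and the ISA from “红” to “颜色” are not the same kind of hypernymy.

Bai: The value-name relation is not hypernymy in the strict sense. Laying it out horizontally is certainly fine. This is the fork between referential and predicative, which is unlike ordinary multiple parenthood.

Li: In practical applications this distinction is hardly necessary. Besides, when it is needed there is still a way to draw it: one extra feature does the job (instead of duplicating dozens of parallel features).

Bai: That depends on whose practical application.

"Referential generalization of a predicative sememe", or call it a "cross-domain hypernym".

Li: Right, a cross-category, cross-level link. It is like the difference between a concept and a "meta" concept. A moment's inattention and you get level entanglement. One can say “红太阳” (red sun), “白太阳” (white sun), even “黑太阳” (black sun), but not “颜色太阳” (color sun). (During the Cultural Revolution, mentioning "red sun, white sun, black sun" would have gotten you branded an active counter-revolutionary on the spot, with no escape. Times have progressed after all.)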

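Bai: Verbs have the same problem.

Also, on the noun side, role names are subordinate to events; they are reflected back from events. Take “凶手”, mentioned at the start. “凶手” belongs to the event “杀人” (killing), and the killing event can be traced cross-domain to its noun hypernym “案件” (case), so “凶手” can also belong indirectly to “案件”. In other words, the slots of a concrete event can be inherited by its abstract-event hypernym.

Li: “杀人” has an 【agent】 slot whose type is 【human】 or, more finely, 【murderer】.

【murderer】 --> 【perpetrator】 --> human

“杀人” (nominalized) denotes a kind of case, and a case is an event, so the fillers of the slots of “杀人” are naturally roles in the case/event.

Bai: I do not think HowNet provides a ready computational mechanism for these things.

Li: Take a closer look when you have time. I think HowNet is very fine-grained and should have linked “案件” with “杀人”, and “杀人” with “凶手”. As for the computational mechanism over these links, what functions can be called and so on, HowNet is indeed not strong there, or at least not easy to use.

Bai: The issue is that 杀人 is a verb while 案件/事件 (case/event) are nouns: a domain crossing. Unless this verb-to-noun generalization is made explicit, there is no reason to dig an associated slot for the noun.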

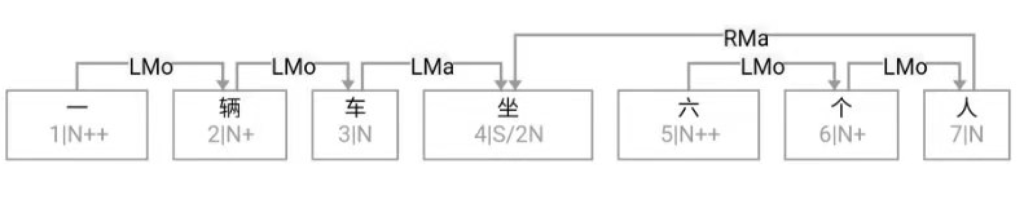

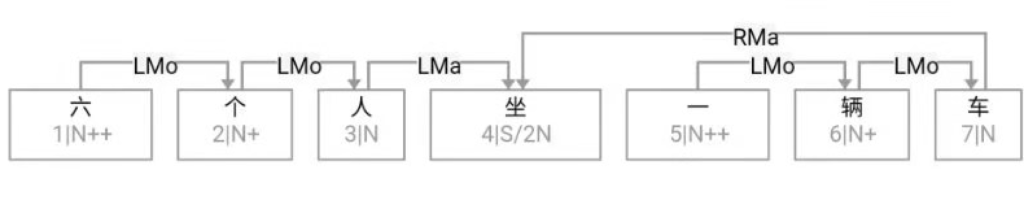

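In our treatment, “凶手” is N/X. When the X in the denominator takes a verb filler, it is the role name of a concrete event; when it takes a noun filler, it is the role name of an abstract event. Role names and attribute names should be handled by a similar mechanism; only then is the design simple and usable. Being able to do this at the instance level is not the same as being able to do it at the level of representation and mechanism. “凶手” is an instance of agent, or some hyponym of it.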
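A rough sketch of the N/X treatment of a role name: the X may be filled by the concrete event the role belongs to, or by that event's cross-domain (noun) generalization. The two tables and the function name are hypothetical, and the mapping from 杀人 to 案件 is just the example from the discussion.

```python
# Cross-domain generalization: concrete (verb-like) event -> abstract (noun-like) event.
ABSTRACT_OF = {"kill": "case"}  # 杀人 -> 案件

# Role names and the event slot they come from.
ROLE_OF = {"murderer": ("kill", "agent")}  # 凶手 = agent of 杀人


def host_kind(role_name, event):
    """Classify what fills the X of a role name typed N/X.

    Returns "concrete" when the filler is the role's own event,
    "abstract" when it is that event's noun generalization,
    and None when the event cannot host the role at all.
    """
    home_event, _slot = ROLE_OF[role_name]
    if event == home_event:
        return "concrete"
    if ABSTRACT_OF.get(home_event) == event:
        return "abstract"
    return None


print(host_kind("murderer", "kill"))     # 杀害李四的凶手
print(host_kind("murderer", "case"))     # 这个案件的凶手
print(host_kind("murderer", "meeting"))  # no link
```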

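Li: Right. Similar roles include “受害者” (victim) and “受益人” (beneficiary), and even the concepts behind the words “施事” (agent), “受事” (patient) and “对象” (target). These are all meta-concepts that happen to intersect with the role definitions 【agent】, 【patient】 and so on inside the logical-semantic system.

Look up the definition of “凶手”: a murderer is the agent of killing. “刺客” (assassin) is a refinement of “凶手”, and “凶手” is a refinement of “施事”.

Bai: We call them category words; color, length, degree... are all category words too.

What does introducing the name-value system mean for an ontology? What I see is that it opens a channel for horizontal, cross-domain links. Not only can "event" itself lead to "all things"; even the "roles" attached to an "event" can lead to "all things" and find there the abstraction that generalizes them. Only this fits von Neumann's principle: programs are data too.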

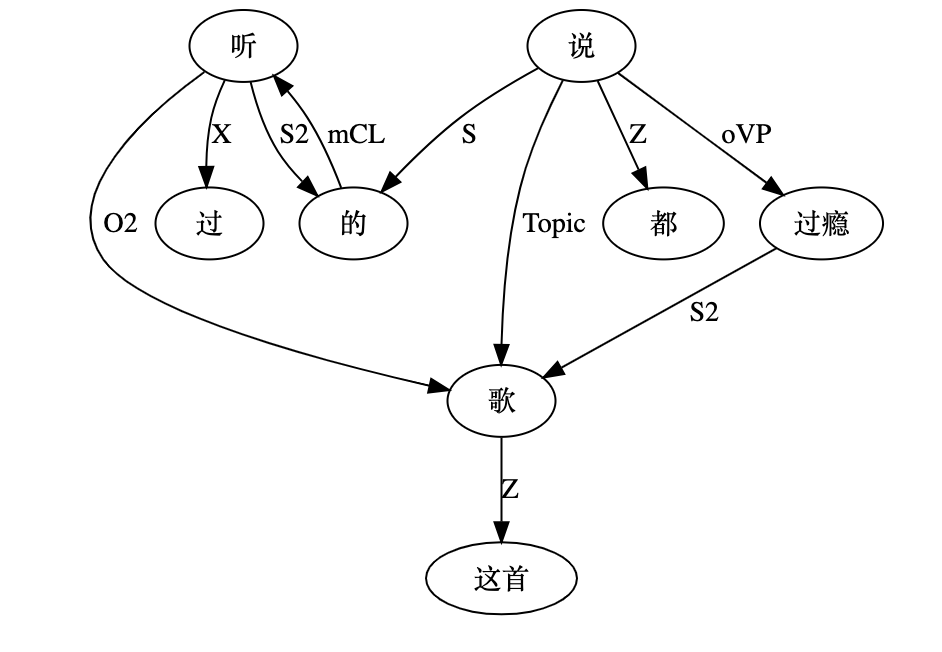

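In practice, refined attribute values (such as “砖红” brick red and “翠绿” emerald green) rarely serve directly as predicates; more often they are attributives, which is to say they behave like the "distinguishing words" (区别词) of the Peking University system. The requirements and constraints a modifier places on what it modifies are of the same data type as a slot. Unlike a slot, though, when modifier and modified combine, these requirements and constraints are passed on to the modified element, in a process somewhat like unification. As a special case, when a modifier is promoted, they are passed to the zero element that results from the promotion.

A slot has its own requirements and constraints, which are passed back to the filler when the slot is filled. If the filler can be reused, the labels it received this way can be used just like the labels it brought from the lexicon. For example, “我吃的” (what I eat) receives the Food label from the slot.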
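The back-propagation of slot labels to the filler can be sketched as a label merge at fill time. The `Filler` class, the `SLOTS` table and the label names other than Food are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Filler:
    form: str
    labels: set = field(default_factory=set)


# Hypothetical slot table: (predicate, role) -> labels the slot demands.
SLOTS = {("eat", "patient"): {"Food"}}


def fill(predicate, role, filler):
    """Fill a slot and hand the slot's labels back to the filler.

    A new Filler is returned so the lexicon entry itself is not mutated.
    """
    return Filler(filler.form, filler.labels | SLOTS[(predicate, role)])


what_i_eat = Filler("我吃的", {"Nominalized"})  # carries no Food label of its own
filled = fill("eat", "patient", what_i_eat)
print(sorted(filled.labels))
```

After the fill, the inherited Food label sits alongside the filler's own labels, so a later slot that asks for Food can accept the same constituent.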

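We call the bundle of labels that a modifier carries as requirements and constraints on its potential modified element the "modification target", and coined the word Modee for it. A modifier has one set of semantic labels for itself and prepares another set for its modification target. Its own semantic labels are dual-track: one track points to its hypernym attribute value, the other to its corresponding attribute name. The hypernym attribute value stays in the predicate domain, while the attribute name crosses into the nominal domain. This differs from ordinary multiple parenthood: it is cross-domain multiple parenthood.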
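One way to picture a modifier with dual-track labels plus a Modee is the sketch below. The class layout, the `CLASH` list and the `modify` function are all hypothetical; the label merge only gestures at unification and is far simpler than a real unifier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Modifier:
    form: str
    value_parent: str    # is-a parent, stays in the predicate domain
    attribute_name: str  # cross-domain parent, in the nominal domain
    modee: frozenset     # labels demanded of whatever gets modified


@dataclass(frozen=True)
class Nominal:
    form: str
    labels: frozenset


# Label pairs assumed to be mutually exclusive (illustrative only).
CLASH = [frozenset({"PhysicalObject", "AbstractObject"})]


def modify(mod, head):
    """Combine modifier and head, passing the Modee labels on to the result.

    Returns None when the merged labels contain a clashing pair.
    """
    merged = head.labels | mod.modee
    if any(pair <= merged for pair in CLASH):
        return None
    return Nominal(f"{mod.form}{head.form}", merged)


BRICK_RED = Modifier("砖红", "红", "颜色", frozenset({"PhysicalObject"}))

print(modify(BRICK_RED, Nominal("东西", frozenset())))  # underspecified head gains a label
print(modify(BRICK_RED, Nominal("思想", frozenset({"AbstractObject"}))))  # blocked
```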

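Li: In formal logic, the modification target and the predication target (the logical subject) are unified: each digs a target slot that expects the same type of filler.

Chomskyan syntax makes an abstraction similar to this ontological-semantic one: the syntactic subject of a predicate and the attributive of a noun are both taken to occupy the so-called specifier position, with a certain structural homogeneity.

As the typical modifier, an adjective digs just such a slot, one that needs a subject or a modified element. As for the adjective subclass of "distinguishing words" (“男” male, “女” female, etc.), which cannot serve as predicates and can only be modifiers, that is a language-internal syntactic habit, not a constraint at the level of logical semantics. That distinguishing words cannot be predicates in Chinese does not mean they cannot be predicates in other languages. But whether as predicate or as modifier, the ontological requirement on the target is cross-lingual. In Esperanto one can say: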

la vir-o est-as vir-a

(the man is male)

la vir-o vir-as

(the man male-s)

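Note: in Esperanto morphology, -o marks nouns, -a marks adjectives, and -as marks the predicate (present tense). All of these belong to the language level, outside the ontology.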

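Seen this way, another feature or some other means should be used to distinguish predicate from modifier, while at the level of ontological logic they are one and the same slot digger (predicate).

Whether an adjective, as a logical predicate, serves as predicate or as modifier in the syntactic structure is information beyond the ontology's static knowledge. This distinction, which comes from syntactic structure, is also part of semantic understanding and must also be reflected in the parse graph of the semantic structure, but it does not affect the uniform ontological constraints that the slot-digging predicate places on its target filler.

Bai: Which label to use, N+ or S/N, can be settled at the lexical stage or at the syntactic stage. That is not a problem.

Complement and attributive are different, though: 喝 好酒 (drink good wine) vs 喝好 酒 (drink the wine well).

Li: Formal logic is rather blunt, or crude. The former is 喝(酒) & 好(酒); the latter is 好(喝(酒)). Of course they differ. The reason is that “好” (good) is too broad-spectrum: the slot it digs can target a nominal or a predicate.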
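The two logical forms can be written as nested tuples, which makes the difference in what 好 (good) applies to easy to inspect. The tuple encoding and the `args_of` helper are illustrative assumptions, with English predicate names standing in for 喝, 好 and 酒.

```python
# 喝 好酒: drink(wine) & good(wine)
ATTRIBUTIVE = ("and", ("drink", "wine"), ("good", "wine"))

# 喝好 酒: good(drink(wine))
COMPLEMENT = ("good", ("drink", "wine"))


def args_of(form, predicate):
    """Collect the arguments that `predicate` applies to anywhere in `form`."""
    if not isinstance(form, tuple):
        return []
    head, *rest = form
    found = list(rest) if head == predicate else []
    for sub in rest:
        found += args_of(sub, predicate)
    return found


print(args_of(ATTRIBUTIVE, "good"))  # the target is an entity
print(args_of(COMPLEMENT, "good"))   # the target is an event
```

In the first form the target of good is the nominal wine; in the second it is the whole drinking event, which is the "broad-spectrum" behavior Li points to.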

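Bai: Whether it is “红 太阳” (red sun) or “太阳 红” (the sun is red), the syntactic labels "N+" and "S/N" for “红” can coexist, leaving syntax to choose. The semantic labels are one and the same set. In “太阳 红”, taking N+ for “红” leads to a dead end. In “红 太阳”, modification already has higher priority than slot filling. So the choice poses no difficulty. The semantic labels in the lexicon are a single set of data, but when building an N+ constituent versus an S/N constituent, the way the label data is loaded differs slightly.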
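A deliberately tiny sketch of letting syntax choose between the two labels: modification (N+ followed by N) is tried first, slot filling (N followed by S/N) second. The category strings follow the discussion, but the two-word `analyse` function and its lexicon are assumptions and are much cruder than the actual parsing scheme.

```python
# Both syntactic labels coexist on 红; the semantic labels (not shown) are shared.
CATS = {
    "红": ["N+", "S/N"],
    "太阳": ["N"],
}


def analyse(left, right):
    """Combine two adjacent words, trying modification before slot filling."""
    # Modification has the higher priority: N+ N -> N
    if "N+" in CATS[left] and "N" in CATS[right]:
        return ("N", "modification")
    # Slot filling: N S/N -> S
    if "N" in CATS[left] and "S/N" in CATS[right]:
        return ("S", "slot filling")
    return None  # dead end for this pair


print(analyse("红", "太阳"))  # 红 太阳
print(analyse("太阳", "红"))  # 太阳 红
```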

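Li: It is natural for lexical information to supply multiple constraint features; this information is aimed at potential relation partners anyway. At realization time (once one structural path has been taken), the other path is usually blocked off naturally.

Bai: The reverse case is that when a dead end has been taken, the live path's priority is naturally the highest at that moment, so long as constituents already used in the analysis are allowed to be reused.

Li: The slot-digging information of the semantic ontology in the lexicon (semantic expectation features) should be one and the same; it is the syntactic pattern information (syntactic subcat features) that varies. Expectation and subcat are two sets, constraints on wording along two different dimensions. The former are cross-lingual constraints; only the latter are language-specific. So-called knowledge-based parsing, in the end, starts from the semantic expectation and looks at how the language realizes it through some syntactic subcat pattern. That is how people externalize thought into sentences; deep parsing reenacts the process in reverse, balancing the constraints of the syntactic and semantic dimensions.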

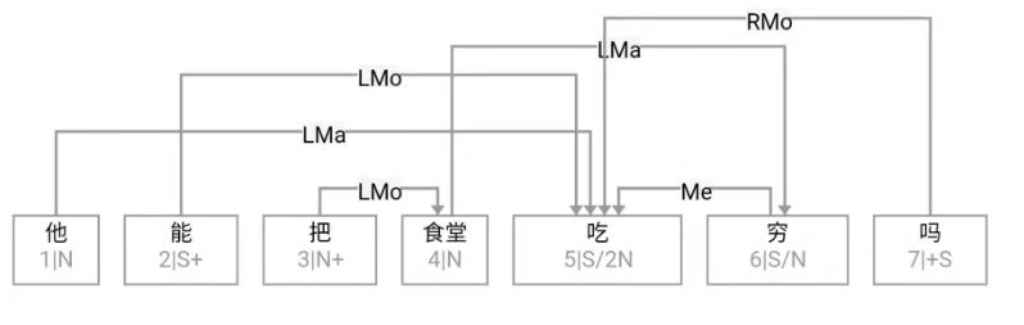

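Bai: Adjectives get a dual treatment (N+ and S/N); verbs get a single treatment (S/*). A verb serves as an attributive through demotion, under a whitelist regime: it is activated only when specific conditions are met.

Li: The classic Chinese structural ambiguity cases are “学习文件” (study documents / documents for study) and “炒鸡蛋” (stir-fry eggs / stir-fried eggs). In terms of ontological constraints, “文件” (documents) can be "studied", which is what allows it to be the modified element (the head of the NP), meaning "documents (that are / to be) studied".

Bai: 炒鸡蛋 works as a dish name; 炒苏北草鸡蛋 (stir-fry northern-Jiangsu free-range eggs) falls flat as a dish name.

Li: If it is “学习 x”, it cannot be a modifier-head structure, only verb-object. Since x is undetermined and carries no ontological information, it fails the filler constraint under the whitelist regime. But it falls outside the blacklist, so it qualifies as an object.

Likewise, “炒 x” can only be verb-object. With “炒【food】”, Chinese becomes ambiguous.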
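The whitelist/blacklist asymmetry can be sketched like this: the modifier-head reading needs a known ontological match, while the verb-object reading is ruled out only by a known mismatch. The tables and the `readings` function are hypothetical, with English stand-ins for 炒 and 学习.

```python
# Toy is-a links and the patient type each verb expects (illustrative names).
ISA = {"egg": "food", "document": "text"}
PATIENT_TYPE = {"stir-fry": "food", "study": "text"}


def is_a(concept, ancestor):
    """Walk the toy is-a chain upward."""
    while concept is not None:
        if concept == ancestor:
            return True
        concept = ISA.get(concept)
    return False


def readings(verb, noun_type):
    """List the structural readings open to V + N; noun_type None means an unknown x."""
    wanted = PATIENT_TYPE[verb]
    known_match = noun_type is not None and is_a(noun_type, wanted)
    result = []
    if noun_type is None or known_match:  # blacklist: rejected only on a known clash
        result.append("verb-object")
    if known_match:                       # whitelist: requires a known match
        result.append("modifier-head")
    return result


print(readings("stir-fry", None))   # 炒 x
print(readings("stir-fry", "egg"))  # 炒鸡蛋
```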

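Bai: An expanded food does not work either; it can only be verb-object.

Li: This structural ambiguity arises because the ontological semantics constrains the arg slot and the modee slot identically, and the syntactic word-order constraints (verb before object, modifier before head) also happen to fit both structures. To disambiguate, one has to look outside the ontological constraints for fine-grained conditions or heuristics, such as the syllable-count pattern (2-2 and 1-2 patterns are more often modifier-head; an N longer than two syllables leans toward verb-object), whether the item is open-ended or can be memorized (modifier-head structures used as dish names are less open than verb-object structures), the structure of the filler itself (a bare N or a "dressed" one, i.e. a noun group: an N with an attributive, especially a long one, is almost always an object), and so on.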
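The heuristics Li lists could be strung together roughly as below. This is only a sketch: the ordering of the checks, the function name and the `memorized` set are assumptions, the result is a preference rather than a verdict, and one Chinese character is taken to be one syllable.

```python
def prefer(verb, noun, noun_has_modifier=False, memorized=frozenset()):
    """Guess the preferred reading of V + N from surface cues alone."""
    # Memorized (listed) items such as dish names favor modifier-head.
    if verb + noun in memorized:
        return "modifier-head"
    # A "dressed" noun, or one longer than two syllables, leans verb-object.
    if noun_has_modifier or len(noun) > 2:
        return "verb-object"
    # 2-2 and 1-2 syllable patterns lean modifier-head.
    if (len(verb), len(noun)) in {(2, 2), (1, 2)}:
        return "modifier-head"
    return "verb-object"


print(prefer("炒", "鸡蛋"))       # 1-2 pattern
print(prefer("学习", "文件"))     # 2-2 pattern
print(prefer("炒", "苏北草鸡蛋"))  # long, modified noun
```

Giving the memorized check its own branch is one simple way to let listed and open-ended items carry different priorities.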

Bai: Memorized items and open-ended items have different priorities.
